Introduction

In modern cloud-native architectures, it’s increasingly common to run workloads in one cloud provider while needing to access resources in another. Whether you’re running a multi-cloud strategy, migrating between providers, or building a distributed system, your Kubernetes pods need secure, passwordless authentication across AWS, Azure, and GCP.

This guide demonstrates how to implement cross-cloud authentication using industry best practices:

- AWS IRSA (IAM Roles for Service Accounts)

- Azure Workload Identity

- GCP Workload Identity Federation

We’ll cover three real-world scenarios:

- Pods running in EKS authenticating to AWS, Azure, and GCP

- Pods running in AKS authenticating to AWS, Azure, and GCP

- Pods running in GKE authenticating to AWS, Azure, and GCP

Important Note: These scenarios rely on Kubernetes bound service account tokens (projected tokens that are audience-scoped and time-limited), and this guide assumes Kubernetes 1.24+. Legacy secret-based service account tokens will not work for federation.
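To tell the two token types apart, the sketch below checks claims that have already been decoded. It assumes the typical claim layout: bound tokens carry `aud`, `exp`, and a nested `kubernetes.io` claim, while legacy secret-based tokens use the issuer `kubernetes/serviceaccount` and have no expiry. `looks_like_bound_token` is a hypothetical helper, and the claim values are made-up examples.

```python
from typing import Any, Dict


def looks_like_bound_token(claims: Dict[str, Any]) -> bool:
    """Heuristic check on decoded JWT claims (hypothetical helper).

    Bound (projected) tokens carry an audience, an expiry, and a nested
    "kubernetes.io" claim. Legacy secret-based tokens use the issuer
    "kubernetes/serviceaccount" and never expire.
    """
    if claims.get("iss") == "kubernetes/serviceaccount":
        return False
    return "exp" in claims and "aud" in claims and "kubernetes.io" in claims


# Example claim sets (values are illustrative only)
bound = {
    "iss": "https://oidc.example.com/id/EXAMPLE",
    "sub": "system:serviceaccount:default:eks-cross-cloud-sa",
    "aud": ["sts.amazonaws.com"],
    "exp": 1700003600,
    "kubernetes.io": {"namespace": "default",
                      "serviceaccount": {"name": "eks-cross-cloud-sa"}},
}
legacy = {
    "iss": "kubernetes/serviceaccount",
    "sub": "system:serviceaccount:default:eks-cross-cloud-sa",
    "kubernetes.io/serviceaccount/namespace": "default",
}

print(looks_like_bound_token(bound))   # True
print(looks_like_bound_token(legacy))  # False
```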

Table of Contents

- Prerequisites

- Why Use Workload Identity Instead of Static Credentials?

- How Workload Identity Federation Works

- Understanding Token Flow Differences

- Scenario 1: Pods Running in EKS

- Scenario 2: Pods Running in AKS

- Scenario 3: Pods Running in GKE

- Security Best Practices

- Production Hardening

- Performance Considerations

- Comparison Matrix

- Migration Guide

- Conclusion

Prerequisites

Before experimenting with the samples for cross-cloud authentication in this blog post, you’ll need:

Required Tools

- `kubectl` (v1.24+)

- `aws` CLI (v2.x) and `eksctl`

- `az` CLI (v2.50+)

- `gcloud` CLI (latest)

- `jq` (for JSON processing)

Cloud Accounts

- AWS account with appropriate IAM permissions

- Azure subscription with Owner or User Access Administrator role

- GCP project with Owner or IAM Admin role

Cluster Setup

This section provides commands to create Kubernetes clusters on each cloud provider with OIDC/Workload Identity enabled. If you already have clusters, skip to the scenario sections.

```bash
# EKS cluster (~15-20 minutes)
export AWS_PROFILE=<your AWS profile for the account where the cluster will be created>
eksctl create cluster \
  --name my-eks-cluster \
  --region us-east-1 \
  --nodegroup-name standard-workers \
  --node-type t3.medium \
  --nodes 2 \
  --with-oidc \
  --managed

# AKS cluster (~5-10 minutes)
# Log in to Azure and select the subscription you want to work in
az login
az group create --name my-aks-rg --location eastus2
az aks create \
  --resource-group my-aks-rg \
  --name my-aks-cluster \
  --node-count 2 \
  --node-vm-size Standard_D2s_v3 \
  --enable-managed-identity \
  --enable-oidc-issuer \
  --enable-workload-identity \
  --network-plugin azure \
  --generate-ssh-keys
az aks get-credentials --resource-group my-aks-rg --name my-aks-cluster --file my-aks-cluster.yaml

# GKE cluster (~5-8 minutes)
gcloud auth login
gcloud config set project YOUR_PROJECT_ID
gcloud services enable container.googleapis.com
# Regional cluster: --num-nodes=1 creates one node in each zone of the region
gcloud container clusters create my-gke-cluster \
  --region=us-central1 \
  --num-nodes=1 \
  --enable-ip-alias \
  --workload-pool=YOUR_PROJECT_ID.svc.id.goog \
  --release-channel=regular
KUBECONFIG=my-gke-cluster.yaml gcloud container clusters get-credentials my-gke-cluster --region=us-central1

# Verify each cluster; make sure your kubectl context (or KUBECONFIG) points at the new cluster
kubectl get nodes
```

Cluster Cleanup

```bash
# Delete EKS
eksctl delete cluster --name my-eks-cluster --region us-east-1

# Delete AKS
az group delete --name my-aks-rg --yes --no-wait

# Delete GKE
gcloud container clusters delete my-gke-cluster --region us-central1 --quiet
```

Why Use Workload Identity Instead of Static Credentials?

Traditional approaches using static credentials (API keys, service account keys, access tokens) have significant drawbacks:

- Security risks: Credentials can be leaked, stolen, or compromised

- Rotation complexity: Manual credential rotation is error-prone

- Audit challenges: Difficult to track which workload used which credentials

- Compliance issues: Long-lived, shared credentials are hard to scope and often end up over-privileged, which works against the principle of least privilege

Workload identity federation solves these problems by:

✅ No static credentials: Tokens are automatically generated and short-lived

✅ Automatic rotation: No manual intervention required

✅ Fine-grained access control: Each pod gets only the permissions it needs

✅ Better auditability: Cloud provider logs show which Kubernetes service account made the request

✅ Standards-based: Uses OpenID Connect (OIDC) for trust establishment

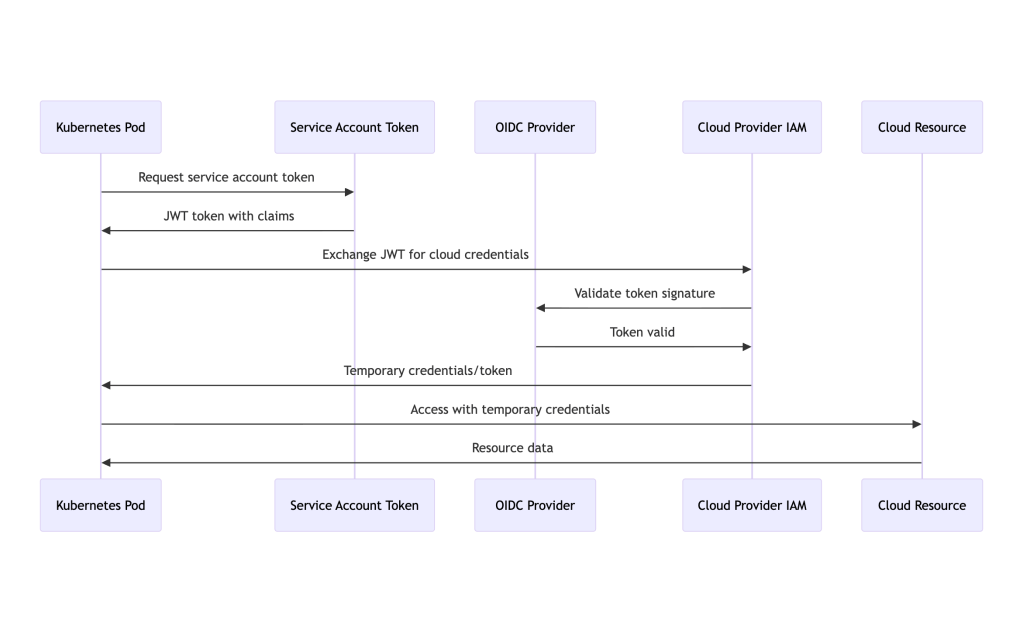

How Workload Identity Federation Works

All three cloud providers use a similar pattern based on OIDC trust:

The flow:

- The kubelet requests a service account token for the pod from the Kubernetes API and mounts it into the pod through a projected volume

- Kubernetes issues a signed JWT with claims (namespace, service account, audience)

- Pod exchanges this JWT with the cloud provider’s IAM service

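- Cloud provider validates the JWT signature using the public keys published by the cluster's OIDC issuer, then checks the issuer, subject, and audience claims against its trust configuration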

- Cloud provider returns temporary credentials/tokens

- Pod uses these credentials to access cloud resources
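Steps 2 and 4 revolve around the JWT's claims. A minimal sketch for inspecting them, assuming only the standard JWT layout (`header.payload.signature`, each part base64url-encoded): it decodes the payload without verifying the signature, so it is a debugging aid, not a validator. `decode_jwt_claims` and the sample claims are hypothetical.

```python
import base64
import json
from typing import Any, Dict


def decode_jwt_claims(token: str) -> Dict[str, Any]:
    """Return the payload of a JWT WITHOUT verifying its signature.

    Inspection only: the cloud provider performs the real validation
    using the keys published by the issuer.
    """
    try:
        payload_b64 = token.split(".")[1]
    except IndexError:
        raise ValueError("not a JWT: expected header.payload.signature") from None
    # JWTs use unpadded base64url; restore padding before decoding
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def _b64url(obj: Dict[str, Any]) -> str:
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Build a fake, unsigned token purely to exercise the decoder
claims = {"iss": "https://oidc.example.com/id/EXAMPLE",
          "sub": "system:serviceaccount:default:eks-cross-cloud-sa",
          "aud": ["api://AzureADTokenExchange"]}
fake_token = f"{_b64url({'alg': 'none'})}.{_b64url(claims)}.sig"

decoded = decode_jwt_claims(fake_token)
print(decoded["sub"], decoded["aud"])
```

Inside a pod, you would pass it the contents of a projected token file (for example, the `azure-identity-token` file mounted in Scenario 1.2) to confirm which `iss`, `sub`, and `aud` the cloud provider will see.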

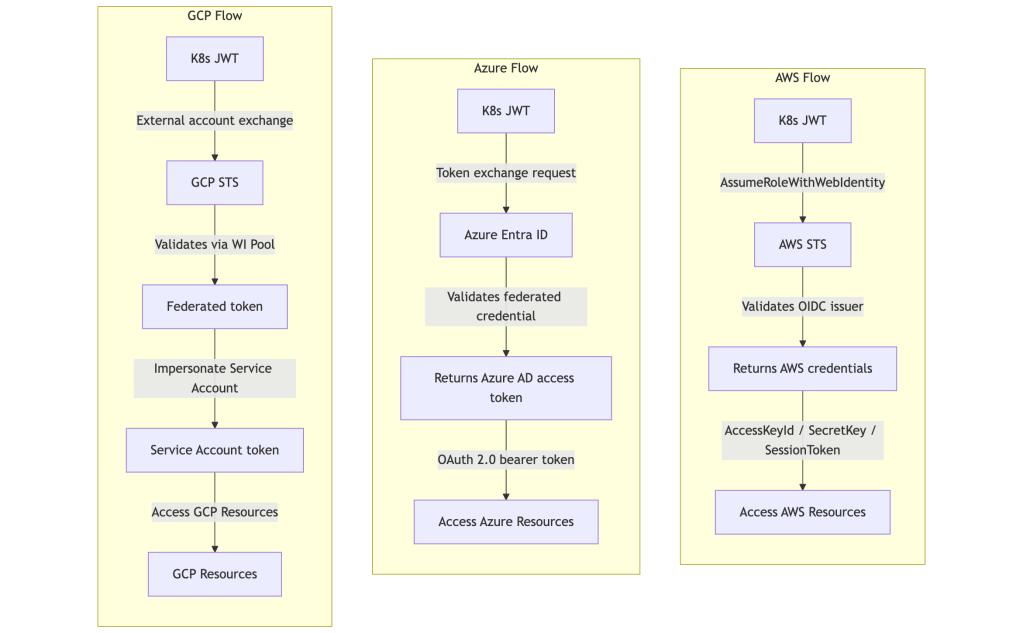

Understanding Token Flow Differences

While all three providers use OIDC federation, their implementation details differ:

| Cloud Provider | Validates OIDC Directly? | Uses STS/Token Service? | Mechanism |
|---|---|---|---|
| AWS | Yes (STS validates OIDC) | Yes (AWS STS) | AssumeRoleWithWebIdentity |
| Azure | Yes (Entra ID validates OIDC) | Yes (Azure AD token endpoint) | Federated credential match → access token |
| GCP | Yes (STS validates via WI Pool) | Yes (GCP STS) | External account → STS → SA impersonation |

Key Differences:

- AWS: Direct OIDC validation via STS, returns temporary AWS credentials (AccessKeyId, SecretAccessKey, SessionToken)

- Azure: Entra ID validates OIDC token against federated credential configuration, returns Azure AD access token (OAuth 2.0 bearer token)

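- GCP: Two-step process: GCP STS validates the token against the Workload Identity Pool provider and returns a federated access token, which is then exchanged for a service account access token through impersonation (`generateAccessToken`)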
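The three exchanges differ mainly in the request each SDK sends on your behalf. The sketch below only builds (never sends) those request bodies to make the differences concrete. Parameter names follow the AWS STS `AssumeRoleWithWebIdentity` API, the Microsoft identity platform client-credentials flow with a federated client assertion, and the GCP STS token-exchange endpoint; the role ARN, client ID, scope, and provider path are placeholders, and the SDKs used in this guide make these calls for you.

```python
from typing import Dict
from urllib.parse import urlencode


def aws_sts_params(jwt: str, role_arn: str, session: str) -> Dict[str, str]:
    # Query parameters for https://sts.amazonaws.com/ (AssumeRoleWithWebIdentity)
    return {
        "Action": "AssumeRoleWithWebIdentity",
        "Version": "2011-06-15",
        "RoleArn": role_arn,
        "RoleSessionName": session,
        "WebIdentityToken": jwt,
    }


def azure_token_form(jwt: str, client_id: str, scope: str) -> Dict[str, str]:
    # Form body for https://login.microsoftonline.com/<tenant>/oauth2/v2.0/token
    return {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "scope": scope,
        "client_assertion_type": "urn:ietf:params:oauth:client-assertion-type:jwt-bearer",
        "client_assertion": jwt,
    }


def gcp_sts_form(jwt: str, provider_audience: str) -> Dict[str, str]:
    # Form body for https://sts.googleapis.com/v1/token (step 1 of 2;
    # step 2 impersonates the service account via generateAccessToken)
    return {
        "grant_type": "urn:ietf:params:oauth:grant-type:token-exchange",
        "audience": provider_audience,
        "scope": "https://www.googleapis.com/auth/cloud-platform",
        "requested_token_type": "urn:ietf:params:oauth:token-type:access_token",
        "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
        "subject_token": jwt,
    }


jwt = "<projected-service-account-token>"  # placeholder
print(urlencode(aws_sts_params(jwt, "arn:aws:iam::123456789012:role/example", "demo")))
```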

Understanding Token Audience in Cross-Cloud Authentication

When authenticating from one cloud provider to other cloud providers, you must configure the token audience claim correctly. Each cloud provider has specific requirements:

Token Audience Best Practices

| Source Cluster | Target Cloud | Recommended Audience | Why |
|---|---|---|---|
| AKS | Azure (native) | Automatic via webhook | Native integration handles this |
| AKS | AWS | sts.amazonaws.com | AWS best practice for STS |
| AKS | GCP | WIF Pool-specific or custom | GCP validates via WIF configuration |
| EKS | AWS (native) | Automatic | Native IRSA integration |
| EKS | Azure | api://AzureADTokenExchange | Azure federated credential requirement |
| EKS | GCP | WIF Pool-specific | GCP standard |
| GKE | GCP (native) | Automatic | Native Workload Identity |
| GKE | AWS | sts.amazonaws.com | AWS best practice |
| GKE | Azure | api://AzureADTokenExchange | Azure requirement |
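The cross-cloud rows of the table condense into a small lookup. This sketch mirrors the table's recommendations; the function name is hypothetical, and the GCP value assumes the provider's full resource name is the allowed audience, as configured with `--allowed-audiences` in the GCP scenarios of this guide.

```python
from typing import Optional


def recommended_audience(target: str,
                         project_number: Optional[str] = None,
                         pool: Optional[str] = None,
                         provider: Optional[str] = None) -> str:
    """Audience to request for a cross-cloud projected token (hypothetical helper)."""
    if target == "aws":
        return "sts.amazonaws.com"
    if target == "azure":
        return "api://AzureADTokenExchange"
    if target == "gcp":
        if not (project_number and pool and provider):
            raise ValueError("GCP needs project_number, pool and provider")
        # Full resource name of the Workload Identity Pool provider
        return (f"//iam.googleapis.com/projects/{project_number}"
                f"/locations/global/workloadIdentityPools/{pool}"
                f"/providers/{provider}")
    raise ValueError(f"unknown target cloud: {target!r}")


print(recommended_audience("gcp", "123456789012", "eks-pool", "eks-provider"))
# //iam.googleapis.com/projects/123456789012/locations/global/workloadIdentityPools/eks-pool/providers/eks-provider
```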

Approach 1: Dedicated Tokens per Cloud (Recommended for Production)

Use separate projected service account tokens with cloud-specific audiences:

Advantages:

- ✅ Follows each cloud provider’s best practices

- ✅ Clearer audit trails (audience claim shows target cloud)

- ✅ Better security posture (principle of least privilege)

- ✅ Easier troubleshooting (explicit token-to-cloud mapping)

- ✅ No confusion about which cloud a token is for

Implementation:

```yaml
volumes:
  - name: aws-token
    projected:
      sources:
        - serviceAccountToken:
            path: aws-token
            audience: sts.amazonaws.com
  - name: azure-token
    projected:
      sources:
        - serviceAccountToken:
            path: azure-token
            audience: api://AzureADTokenExchange
  - name: gcp-token
    projected:
      sources:
        - serviceAccountToken:
            path: gcp-token
            audience: //iam.googleapis.com/projects/PROJECT_NUMBER/...
```

Approach 2: Shared Token (Acceptable for Testing/Demos)

Reuse a single token with one audience for multiple clouds:

Use Case: Simplifying demos or when managing multiple projected tokens is impractical

Limitations:

- ⚠️ Violates AWS best practices when using Azure audience

- ⚠️ Less clear in audit logs

- ⚠️ Potential security concerns in highly regulated environments

- ⚠️ May not work in all scenarios (some clouds reject non-standard audiences)

This guide uses Approach 1 (dedicated tokens) for all cross-cloud scenarios to demonstrate production-ready patterns.
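Approach 1 extends to any number of target clouds. A minimal sketch, assuming you generate manifests from code: it builds one projected `serviceAccountToken` volume per cloud and prints JSON, which `kubectl apply -f` also accepts. The helper name is hypothetical; the 600-second floor reflects the Kubernetes minimum for `expirationSeconds`.

```python
import json
from typing import Dict, List

MIN_EXPIRATION_SECONDS = 600  # Kubernetes rejects projected tokens shorter than 10 minutes


def projected_token_volumes(audiences: Dict[str, str],
                            expiration_seconds: int = 3600) -> List[dict]:
    """One projected serviceAccountToken volume per target cloud (hypothetical helper)."""
    if expiration_seconds < MIN_EXPIRATION_SECONDS:
        raise ValueError("expirationSeconds must be at least 600")
    volumes = []
    for cloud, audience in audiences.items():
        volumes.append({
            "name": f"{cloud}-token",
            "projected": {
                "sources": [{
                    "serviceAccountToken": {
                        "path": f"{cloud}-token",
                        "audience": audience,
                        "expirationSeconds": expiration_seconds,
                    }
                }]
            },
        })
    return volumes


vols = projected_token_volumes({
    "aws": "sts.amazonaws.com",
    "azure": "api://AzureADTokenExchange",
})
print(json.dumps({"volumes": vols}, indent=2))
```

Each container then mounts the `<cloud>-token` volumes it needs by name, so every SDK reads a token whose audience matches its own cloud.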

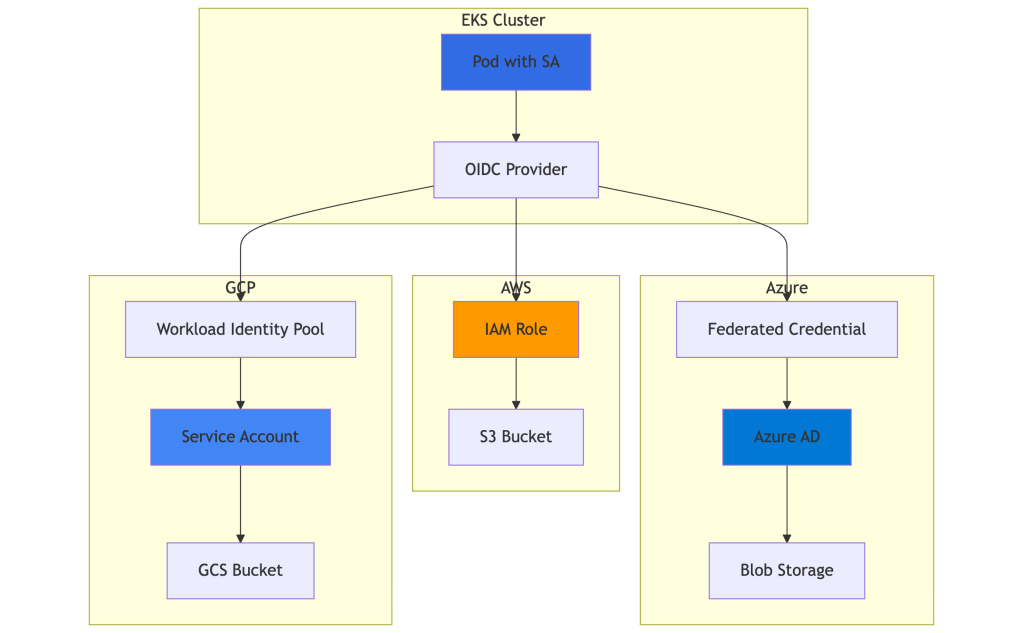

Scenario 1: Pods Running in EKS

Note: After completing this scenario, make sure to clean up the resources using the cleanup steps at the end of this section before proceeding to the next scenario to avoid resource conflicts and unnecessary costs.

Architecture Overview

1.1 Authenticating to AWS (Native IRSA)

Setup Steps:

```bash
# 1. Create the IAM OIDC provider if it does not exist. The cluster above was created
#    with --with-oidc, so this step is not needed here. Otherwise:
#    eksctl utils associate-iam-oidc-provider --cluster my-eks-cluster --region us-east-1 --approve

# 2. Get the OIDC provider URL (without the https:// prefix)
OIDC_PROVIDER=$(aws eks describe-cluster \
  --name my-eks-cluster --region us-east-1 \
  --query "cluster.identity.oidc.issuer" \
  --output text | sed -e "s/^https:\/\///")

# 3. Create the IAM role trust policy
YOUR_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
cat > trust-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${YOUR_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:default:eks-cross-cloud-sa",
          "${OIDC_PROVIDER}:aud": "sts.amazonaws.com"
        }
      }
    }
  ]
}
EOF

# 4. Create the IAM role
aws iam create-role \
  --role-name eks-cross-cloud-role \
  --assume-role-policy-document file://trust-policy.json

# 5. Attach a permissions policy
aws iam attach-role-policy \
  --role-name eks-cross-cloud-role \
  --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 1.1. If authentication works, you will see the success logs shown below.

```yaml
# scenario1-1-eks-to-aws.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: eks-cross-cloud-sa
  namespace: default
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::YOUR_AWS_ACCOUNT_ID:role/eks-cross-cloud-role
---
apiVersion: v1
kind: Pod
metadata:
  name: eks-aws-test
  namespace: default
spec:
  serviceAccountName: eks-cross-cloud-sa
  restartPolicy: Never
  containers:
    - name: aws-test
      image: python:3.11-slim
      command:
        - sh
        - -c
        - |
          pip install --no-cache-dir boto3 && \
          python /app/test_aws_from_eks.py
      env:
        - name: AWS_REGION
          value: us-east-1
      volumeMounts:
        - name: app-code
          mountPath: /app
  volumes:
    - name: app-code
      configMap:
        name: aws-test-code
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-test-code
  namespace: default
data:
  test_aws_from_eks.py: |
    # Paste the contents of test_aws_from_eks.py (below) here
```

Test Code (Python):

```python
# test_aws_from_eks.py
import sys

import boto3


def test_aws_access():
    """Test AWS S3 access using IRSA"""
    try:
        # The SDK automatically picks up the IRSA credentials
        s3_client = boto3.client('s3')

        # List buckets to verify access
        response = s3_client.list_buckets()
        print("AWS Authentication successful!")
        print(f"Found {len(response['Buckets'])} S3 buckets:")
        for bucket in response['Buckets'][:5]:
            print(f"  - {bucket['Name']}")

        # Get caller identity
        sts_client = boto3.client('sts')
        identity = sts_client.get_caller_identity()
        print(f"\nAuthenticated as: {identity['Arn']}")
        return True
    except Exception as e:
        print(f"AWS Authentication failed: {str(e)}")
        return False


if __name__ == "__main__":
    success = test_aws_access()
    sys.exit(0 if success else 1)
```

Success logs:

If `kubectl logs -f -n default eks-aws-test` shows output like the following, EKS-to-AWS authentication worked.

```text
AWS Authentication successful!
Found <number of buckets> S3 buckets:
  - bucket-1
  - bucket-2
  - ...

Authenticated as: arn:aws:sts::YOUR_AWS_ACCOUNT_ID:assumed-role/eks-cross-cloud-role/botocore-session-<some random number>
```

1.2 Authenticating to Azure from EKS

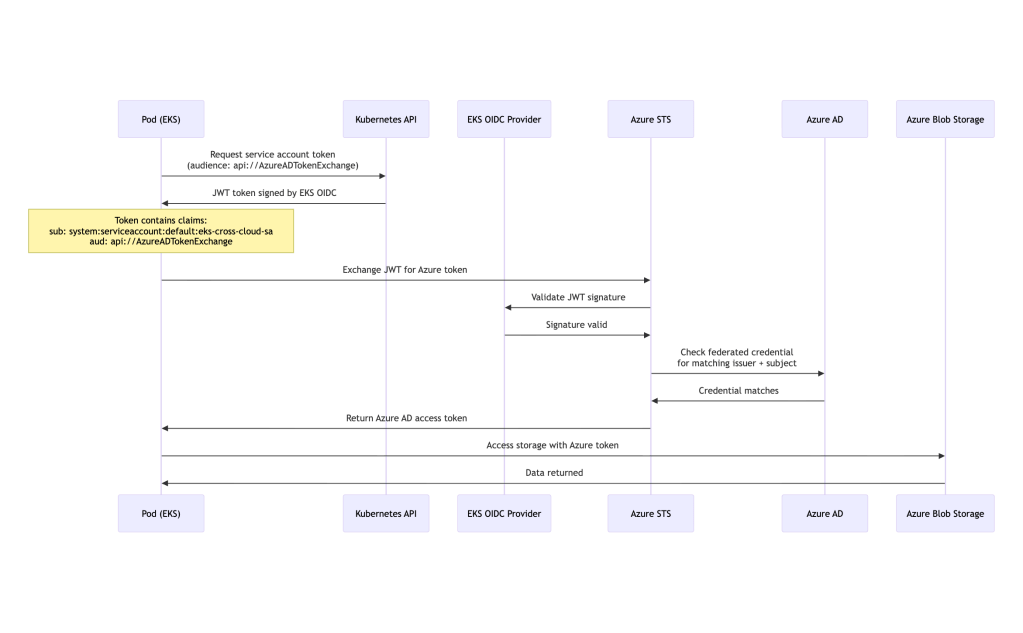

Cross-Cloud Authentication Flow:

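Note: The pod below mounts a dedicated projected token with the audience `api://AzureADTokenExchange`, the standard audience for Azure Workload Identity federated credentials. It is separate from the `sts.amazonaws.com` token that IRSA injects for AWS access, following the dedicated-token approach described above.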
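Entra ID accepts the token only if its claims line up with the federated credential. The sketch below is a simplified, hypothetical model of that comparison, using the issuer, subject, and audience fields of the `federated-credential.json` created in the setup steps; it assumes exact string comparison, and the real service additionally verifies the signature against the issuer's published keys and checks expiry. The issuer URL is a made-up example.

```python
from typing import Any, Dict, List

ISSUER = "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE"  # hypothetical issuer
SUBJECT = "system:serviceaccount:default:eks-cross-cloud-sa"


def matches_federated_credential(claims: Dict[str, Any],
                                 issuer: str,
                                 subject: str,
                                 audiences: List[str]) -> bool:
    """Simplified model: exact issuer and subject match plus an overlapping audience."""
    token_aud = claims.get("aud", [])
    if isinstance(token_aud, str):  # "aud" may be a single string or a list
        token_aud = [token_aud]
    return (claims.get("iss") == issuer
            and claims.get("sub") == subject
            and any(a in audiences for a in token_aud))


token_claims = {"iss": ISSUER, "sub": SUBJECT, "aud": ["api://AzureADTokenExchange"]}
print(matches_federated_credential(token_claims, ISSUER, SUBJECT,
                                   ["api://AzureADTokenExchange"]))  # True
# A token minted for AWS does not satisfy the Azure credential
print(matches_federated_credential(dict(token_claims, aud="sts.amazonaws.com"),
                                   ISSUER, SUBJECT,
                                   ["api://AzureADTokenExchange"]))  # False
```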

Setup Steps:

```bash
# Make sure you have run `az login` and selected the subscription you want to work in

# 1. Get the EKS OIDC issuer URL
OIDC_ISSUER=$(aws eks describe-cluster \
  --name my-eks-cluster --region us-east-1 \
  --query "cluster.identity.oidc.issuer" \
  --output text)

# 2. Create an Azure AD (Entra ID) application
az ad app create \
  --display-name eks-to-azure-app
APP_ID=$(az ad app list \
  --display-name eks-to-azure-app \
  --query "[0].appId" -o tsv)

# 3. Create a service principal for the application
az ad sp create --id $APP_ID
OBJECT_ID=$(az ad sp show \
  --id $APP_ID \
  --query id -o tsv)

# 4. Create the federated credential
cat > federated-credential.json <<EOF
{
  "name": "eks-federated-identity",
  "issuer": "${OIDC_ISSUER}",
  "subject": "system:serviceaccount:default:eks-cross-cloud-sa",
  "audiences": [
    "api://AzureADTokenExchange"
  ]
}
EOF
az ad app federated-credential create \
  --id $APP_ID \
  --parameters federated-credential.json

# 5. Assign an Azure role (scoped to the storage account, not the subscription)
SUBSCRIPTION_ID=$(az account show --query id -o tsv)

# First create the storage account, then get its resource ID
# (storage account names are globally unique; pick another name if ekscrosscloud is taken)
az group create \
  --name eks-cross-cloud \
  --location eastus --subscription $SUBSCRIPTION_ID
az storage account create \
  --name ekscrosscloud \
  --resource-group eks-cross-cloud \
  --location eastus \
  --sku Standard_LRS \
  --kind StorageV2 --subscription $SUBSCRIPTION_ID

# Get the storage account resource ID for proper scoping
STORAGE_ID=$(az storage account show \
  --name ekscrosscloud \
  --resource-group eks-cross-cloud \
  --query id \
  --output tsv)
# Role assignments can take a few minutes to take effect
az role assignment create \
  --assignee $APP_ID \
  --role "Storage Blob Data Reader" \
  --scope $STORAGE_ID

# 6. Create a test container
az storage container create \
  --name test-container \
  --account-name ekscrosscloud --subscription $SUBSCRIPTION_ID \
  --auth-mode login

# Get the tenant ID; you will need it for the manifest below
TENANT_ID=$(az account show --query tenantId -o tsv)
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 1.2. If authentication works, you will see the success logs shown below.

```yaml
# scenario1-2-eks-to-azure.yaml
apiVersion: v1
kind: Pod
metadata:
  name: eks-azure-test
  namespace: default
spec:
  # eks-cross-cloud-sa is created in Scenario 1.1 above
  serviceAccountName: eks-cross-cloud-sa
  restartPolicy: Never
  containers:
    - name: azure-test
      image: python:3.11-slim
      command:
        - sh
        - -c
        - |
          pip install --no-cache-dir azure-identity azure-storage-blob && \
          python /app/test_azure_from_eks.py
      env:
        - name: AZURE_CLIENT_ID
          # Replace YOUR_APP_ID with the appId of the application created above
          value: "YOUR_APP_ID"
        - name: AZURE_TENANT_ID
          # Replace YOUR_TENANT_ID; find it with `az account show --query tenantId --output tsv`
          value: "YOUR_TENANT_ID"
        - name: AZURE_FEDERATED_TOKEN_FILE
          value: /var/run/secrets/azure/tokens/azure-identity-token
      volumeMounts:
        - name: app-code
          mountPath: /app
        - name: azure-token
          mountPath: /var/run/secrets/azure/tokens
          readOnly: true
  volumes:
    - name: app-code
      configMap:
        name: azure-test-code
    - name: azure-token
      projected:
        sources:
          - serviceAccountToken:
              path: azure-identity-token
              expirationSeconds: 3600
              audience: api://AzureADTokenExchange
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: azure-test-code
  namespace: default
data:
  test_azure_from_eks.py: |
    # Paste the contents of test_azure_from_eks.py (below) here
```

Test Code (Python):

```python
# test_azure_from_eks.py
import os
import sys

from azure.identity import WorkloadIdentityCredential
from azure.storage.blob import BlobServiceClient


def test_azure_access():
    try:
        client_id = os.environ.get('AZURE_CLIENT_ID')
        tenant_id = os.environ.get('AZURE_TENANT_ID')
        token_file = os.environ.get('AZURE_FEDERATED_TOKEN_FILE')

        if not all([client_id, tenant_id, token_file]):
            raise ValueError("Missing required environment variables")

        credential = WorkloadIdentityCredential(
            tenant_id=tenant_id,
            client_id=client_id,
            token_file_path=token_file
        )

        # If you created your storage account with a different name, replace ekscrosscloud
        storage_account_url = "https://ekscrosscloud.blob.core.windows.net"
        blob_service_client = BlobServiceClient(
            account_url=storage_account_url,
            credential=credential
        )

        containers = list(blob_service_client.list_containers(results_per_page=5))
        print("✅ Azure Authentication successful!")
        print(f"Found {len(containers)} containers:")
        for container in containers:
            print(f"  - {container.name}")
        return True
    except Exception as e:
        print(f"❌ Azure Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_azure_access()
    sys.exit(0 if success else 1)
```

Success logs:

If `kubectl logs -f -n default eks-azure-test` shows output like the following, EKS-to-Azure authentication worked.

```text
✅ Azure Authentication successful!
Found 1 containers:
  - test-container
```

1.3 Authenticating to GCP from EKS

Setup Steps:

```bash
# 1. Get the EKS OIDC issuer
OIDC_ISSUER=$(aws eks describe-cluster \
  --name my-eks-cluster --region us-east-1 \
  --query "cluster.identity.oidc.issuer" \
  --output text)

# 2. Create a Workload Identity Pool
gcloud auth login
gcloud config set project YOUR_PROJECT_ID
gcloud iam workload-identity-pools create eks-pool \
  --location=global \
  --display-name="EKS Pool"

# 3. Create a Workload Identity Provider
#    An exact subject match is stricter than startsWith(), which would also admit e.g. eks-cross-cloud-sa2
PROJECT_ID=$(gcloud config get-value project)
PROJECT_NUMBER=$(gcloud projects describe ${PROJECT_ID} \
  --format="value(projectNumber)")
gcloud iam workload-identity-pools providers create-oidc eks-provider \
  --location=global \
  --workload-identity-pool=eks-pool \
  --issuer-uri="${OIDC_ISSUER}" \
  --allowed-audiences="//iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/eks-pool/providers/eks-provider" \
  --attribute-mapping="google.subject=assertion.sub,attribute.namespace=assertion['kubernetes.io']['namespace'],attribute.service_account=assertion['kubernetes.io']['serviceaccount']['name']" \
  --attribute-condition="assertion.sub == 'system:serviceaccount:default:eks-cross-cloud-sa'"

# 4. Create a GCP service account
gcloud iam service-accounts create eks-gcp-sa \
  --display-name="EKS to GCP Service Account"
GSA_EMAIL="eks-gcp-sa@${PROJECT_ID}.iam.gserviceaccount.com"

# 5. Create a bucket and grant GCS permissions
#    (bucket names are globally unique; pick another name if eks-cross-cloud is taken)
gcloud storage buckets create gs://eks-cross-cloud \
  --project=${PROJECT_ID} \
  --location=us-central1 \
  --uniform-bucket-level-access
gsutil iam ch serviceAccount:${GSA_EMAIL}:objectViewer gs://eks-cross-cloud

# Allow the test to list buckets in the project
# (roles/storage.admin is broad; scope it down outside of a demo)
gcloud projects add-iam-policy-binding ${PROJECT_ID} \
  --member="serviceAccount:${GSA_EMAIL}" \
  --role="roles/storage.admin"

# 6. Allow the Kubernetes SA to impersonate the GCP SA
gcloud iam service-accounts add-iam-policy-binding ${GSA_EMAIL} \
  --role=roles/iam.workloadIdentityUser \
  --member="principalSet://iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/eks-pool/attribute.service_account/eks-cross-cloud-sa"
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 1.3. If authentication works, you will see the success logs shown below.

```yaml
# scenario1-3-eks-to-gcp.yaml
apiVersion: v1
kind: Pod
metadata:
  name: eks-gcp-test
  namespace: default
spec:
  # eks-cross-cloud-sa is created in Scenario 1.1 above
  serviceAccountName: eks-cross-cloud-sa
  restartPolicy: Never
  containers:
    - name: gcp-test
      image: python:3.11-slim
      command:
        - sh
        - -c
        - |
          pip install --no-cache-dir google-auth google-cloud-storage && \
          python /app/test_gcp_from_eks.py
      env:
        - name: GOOGLE_APPLICATION_CREDENTIALS
          value: /var/run/secrets/workload-identity/config.json
        - name: GCP_PROJECT_ID
          # Replace YOUR_PROJECT_ID with the actual value
          value: "YOUR_PROJECT_ID"
      volumeMounts:
        - name: workload-identity-config
          mountPath: /var/run/secrets/workload-identity
          readOnly: true
        - name: ksa-token
          mountPath: /var/run/secrets/tokens
          readOnly: true
        - name: app-code
          mountPath: /app
          readOnly: true
  volumes:
    - name: workload-identity-config
      configMap:
        name: gcp-workload-identity-config
    - name: app-code
      configMap:
        name: gcp-test-code
    - name: ksa-token
      projected:
        sources:
          - serviceAccountToken:
              path: eks-token
              expirationSeconds: 3600
              # Replace PROJECT_NUMBER with the actual value
              audience: "//iam.googleapis.com/projects/PROJECT_NUMBER/locations/global/workloadIdentityPools/eks-pool/providers/eks-provider"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: gcp-workload-identity-config
  namespace: default
data:
  # Replace YOUR_PROJECT_ID and PROJECT_NUMBER with the actual values
  config.json: |
    {
      "type": "external_account",
      "audience": "//iam.googleapis.com/projects/PROJECT_NUMBER/locations/global/workloadIdentityPools/eks-pool/providers/eks-provider",
      "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
      "token_url": "https://sts.googleapis.com/v1/token",
      "service_account_impersonation_url": "https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/eks-gcp-sa@YOUR_PROJECT_ID.iam.gserviceaccount.com:generateAccessToken",
      "credential_source": {
        "file": "/var/run/secrets/tokens/eks-token"
      }
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: gcp-test-code
  namespace: default
data:
  test_gcp_from_eks.py: |
    # Paste the contents of test_gcp_from_eks.py (below) here
```

Test Code (Python):

```python
# test_gcp_from_eks.py
import os
import sys

from google.auth import default
from google.cloud import storage


def test_gcp_access():
    try:
        credentials, project = default()
        storage_client = storage.Client(
            credentials=credentials,
            project=os.environ.get('GCP_PROJECT_ID')
        )

        buckets = list(storage_client.list_buckets(max_results=5))
        print("GCP Authentication successful!")
        print(f"Found {len(buckets)} GCS buckets:")
        for bucket in buckets:
            print(f"  - {bucket.name}")

        print(f"\nAuthenticated with project: {project}")
        return True
    except Exception as e:
        print(f"GCP Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_gcp_access()
    sys.exit(0 if success else 1)
```

Success logs:

If `kubectl logs -f -n default eks-gcp-test` shows output like the following, EKS-to-GCP authentication worked.

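```text
GCP Authentication successful!
Found <number> GCS buckets:
  - bucket-1
  - bucket-2
  - ...

Authenticated with project: None
```

Important Note:

`project: None` in the output is expected when using external account credentials, because `google.auth.default()` may not be able to infer a project from them. The project the client actually uses comes from the client configuration (here, the `GCP_PROJECT_ID` passed to `storage.Client`), not from the credential itself.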
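The `gcp-workload-identity-config` ConfigMap in Scenario 1.3 is an `external_account` credential file. A sketch that builds the same structure from its inputs, assuming the field names shown in that ConfigMap; the function name and example values are hypothetical. `gcloud iam workload-identity-pools create-cred-config` can also generate this file for you.

```python
import json


def gcp_external_account_config(project_number: str, pool: str, provider: str,
                                gsa_email: str, token_path: str) -> dict:
    """Build an external_account credential config (hypothetical helper)."""
    audience = (f"//iam.googleapis.com/projects/{project_number}"
                f"/locations/global/workloadIdentityPools/{pool}/providers/{provider}")
    return {
        "type": "external_account",
        "audience": audience,
        "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
        "token_url": "https://sts.googleapis.com/v1/token",
        # Step 2 of the GCP flow: impersonate the GCP service account
        "service_account_impersonation_url": (
            "https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/"
            f"{gsa_email}:generateAccessToken"),
        # The projected Kubernetes token the SDK reads and exchanges
        "credential_source": {"file": token_path},
    }


cfg = gcp_external_account_config(
    "123456789012", "eks-pool", "eks-provider",
    "eks-gcp-sa@my-project.iam.gserviceaccount.com",
    "/var/run/secrets/tokens/eks-token",
)
print(json.dumps(cfg, indent=2))
```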

Scenario 1 Cleanup

After testing Scenario 1 (EKS cross-cloud authentication), clean up the resources:

```bash
# ============================================
# AWS Resources Cleanup
# ============================================
# Detach the IAM role policy
aws iam detach-role-policy \
  --role-name eks-cross-cloud-role \
  --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess

# Delete the IAM role
aws iam delete-role --role-name eks-cross-cloud-role

# Note: the OIDC provider will be deleted when the EKS cluster is deleted

# ============================================
# Azure Resources Cleanup
# ============================================
# Get the App ID
APP_ID=$(az ad app list \
  --display-name eks-to-azure-app \
  --query "[0].appId" -o tsv)

# Delete the role assignment (it was created at the storage account scope)
SUBSCRIPTION_ID=$(az account show --query id -o tsv)
STORAGE_ID=$(az storage account show \
  --name ekscrosscloud \
  --resource-group eks-cross-cloud \
  --query id -o tsv)
az role assignment delete \
  --assignee $APP_ID \
  --scope "$STORAGE_ID"

# Delete the federated credential
az ad app federated-credential delete \
  --id $APP_ID \
  --federated-credential-id eks-federated-identity

# Delete the service principal
az ad sp delete --id $APP_ID

# Delete the app registration
az ad app delete --id $APP_ID

# Delete the resource group
az group delete --name eks-cross-cloud --subscription $SUBSCRIPTION_ID --yes --no-wait

# ============================================
# GCP Resources Cleanup
# ============================================
PROJECT_ID=$(gcloud config get-value project)
PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")
GSA_EMAIL="eks-gcp-sa@${PROJECT_ID}.iam.gserviceaccount.com"

# Remove the Workload Identity IAM policy binding
gcloud iam service-accounts remove-iam-policy-binding ${GSA_EMAIL} \
  --role=roles/iam.workloadIdentityUser \
  --member="principalSet://iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/eks-pool/attribute.service_account/eks-cross-cloud-sa" \
  --quiet

# Remove the bucket-level permission first (it cannot be changed once the bucket is gone)
gsutil iam ch -d serviceAccount:${GSA_EMAIL}:objectViewer gs://eks-cross-cloud

# Delete the bucket
gcloud storage buckets delete gs://eks-cross-cloud

# Remove the project-level storage.admin binding
gcloud projects remove-iam-policy-binding ${PROJECT_ID} \
  --member="serviceAccount:${GSA_EMAIL}" \
  --role="roles/storage.admin"

# Delete the GCP service account
gcloud iam service-accounts delete ${GSA_EMAIL} --quiet

# Delete the workload identity provider
gcloud iam workload-identity-pools providers delete eks-provider \
  --location=global \
  --workload-identity-pool=eks-pool \
  --quiet

# Delete the workload identity pool
gcloud iam workload-identity-pools delete eks-pool \
  --location=global \
  --quiet

# ============================================
# Kubernetes Resources Cleanup
# ============================================
# Delete test pods
kubectl delete pod eks-aws-test --force --ignore-not-found
kubectl delete pod eks-azure-test --force --ignore-not-found
kubectl delete pod eks-gcp-test --force --ignore-not-found

# Delete ConfigMaps
kubectl delete configmap aws-test-code --ignore-not-found
kubectl delete configmap azure-test-code --ignore-not-found
kubectl delete configmap gcp-workload-identity-config --ignore-not-found
kubectl delete configmap gcp-test-code --ignore-not-found

# Delete the service account
kubectl delete serviceaccount eks-cross-cloud-sa --ignore-not-found
```

Scenario 2: Pods Running in AKS

Note: After completing this scenario, make sure to clean up the resources using the cleanup steps at the end of this section before proceeding to the next scenario to avoid resource conflicts and unnecessary costs.

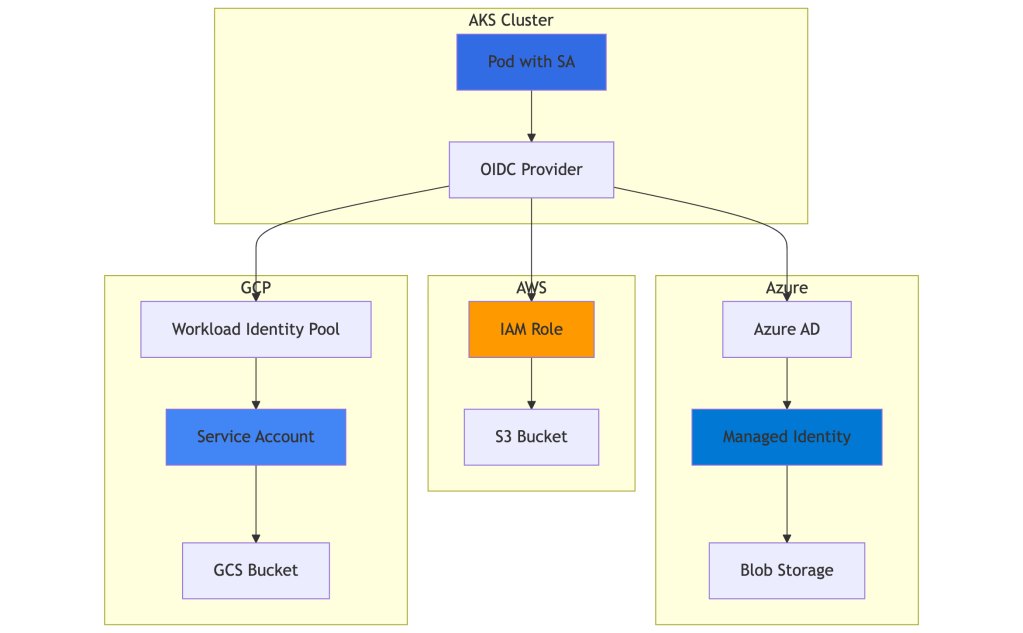

Architecture Overview

2.1 Authenticating to Azure (Native Workload Identity)

Setup Steps:

```bash
# 1. Enable the OIDC issuer and Workload Identity on the AKS cluster
#    (already enabled if you created the cluster with the commands above)
az aks update \
  --resource-group my-aks-rg \
  --name my-aks-cluster \
  --enable-oidc-issuer \
  --enable-workload-identity

# 2. Get the OIDC issuer URL
OIDC_ISSUER=$(az aks show \
  --resource-group my-aks-rg \
  --name my-aks-cluster \
  --query "oidcIssuerProfile.issuerUrl" -o tsv)

# 3. Create a user-assigned managed identity
az identity create \
  --name aks-cross-cloud-identity \
  --resource-group my-aks-rg
CLIENT_ID=$(az identity show \
  --name aks-cross-cloud-identity \
  --resource-group my-aks-rg \
  --query clientId -o tsv)

# 4. Create the federated credential
az identity federated-credential create \
  --name aks-federated-credential \
  --identity-name aks-cross-cloud-identity \
  --resource-group my-aks-rg \
  --issuer "${OIDC_ISSUER}" \
  --subject system:serviceaccount:default:aks-cross-cloud-sa

# 5. Create a storage account
#    (storage account names are globally unique; pick another name if akscrosscloud is taken)
az storage account create \
  --name akscrosscloud \
  --resource-group my-aks-rg \
  --location eastus2 \
  --sku Standard_LRS \
  --kind StorageV2 \
  --min-tls-version TLS1_2

# 6. Create a blob container
az storage container create \
  --name test-container \
  --account-name akscrosscloud \
  --auth-mode login

# 7. Get the storage account resource ID (for proper RBAC scope)
STORAGE_ID=$(az storage account show \
  --name akscrosscloud \
  --resource-group my-aks-rg \
  --query id -o tsv)

# 8. Assign permissions (Storage Blob Data Reader), scoped to the storage account
az role assignment create \
  --assignee $CLIENT_ID \
  --role "Storage Blob Data Reader" \
  --scope "$STORAGE_ID"
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 2.1. If authentication works, you will see the success logs shown below.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: aks-cross-cloud-sa
  namespace: default
  annotations:
    # Replace YOUR_CLIENT_ID with the managed identity's client ID ($CLIENT_ID above)
    azure.workload.identity/client-id: "YOUR_CLIENT_ID"
  labels:
    azure.workload.identity/use: "true"
---
apiVersion: v1
kind: Pod
metadata:
  name: aks-azure-test
  namespace: default
  labels:
    azure.workload.identity/use: "true"
spec:
  serviceAccountName: aks-cross-cloud-sa
  restartPolicy: Never
  containers:
    - name: azure-test
      image: python:3.11-slim
      command: ['sh', '-c', 'pip install --no-cache-dir azure-identity azure-storage-blob && python /app/test_azure_from_aks.py']
      env:
        - name: AZURE_STORAGE_ACCOUNT
          # Replace with your storage account name (akscrosscloud in the steps above)
          value: "YOUR_STORAGE_ACCOUNT"
      volumeMounts:
        - name: app-code
          mountPath: /app
  volumes:
    - name: app-code
      configMap:
        name: azure-test-code-aks
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: azure-test-code-aks
  namespace: default
data:
  test_azure_from_aks.py: |
    # Paste the contents of test_azure_from_aks.py (below) here
```

Test Code (Python):

```python
# test_azure_from_aks.py
import os
import sys

from azure.identity import DefaultAzureCredential
from azure.storage.blob import BlobServiceClient


def test_azure_access():
    """Test Azure Blob Storage access using native AKS Workload Identity"""
    try:
        # DefaultAzureCredential picks up the env vars and token injected by the workload identity webhook
        credential = DefaultAzureCredential()

        storage_account = os.environ.get('AZURE_STORAGE_ACCOUNT')
        account_url = f"https://{storage_account}.blob.core.windows.net"

        blob_service_client = BlobServiceClient(
            account_url=account_url,
            credential=credential
        )

        # List all containers
        containers = list(blob_service_client.list_containers())

        print("✅ Azure Authentication successful!")
        print(f"Found {len(containers)} containers:")
        for container in containers[:5]:  # Display at most the first 5
            print(f"  - {container.name}")
        return True
    except Exception as e:
        print(f"❌ Azure Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_azure_access()
    sys.exit(0 if success else 1)
```

Success logs:

If `kubectl logs -f -n default aks-azure-test` shows output like the following, AKS-to-Azure authentication worked.

```text
✅ Azure Authentication successful!
Found 1 containers:
  - test-container
```

2.2 Authenticating to AWS from AKS

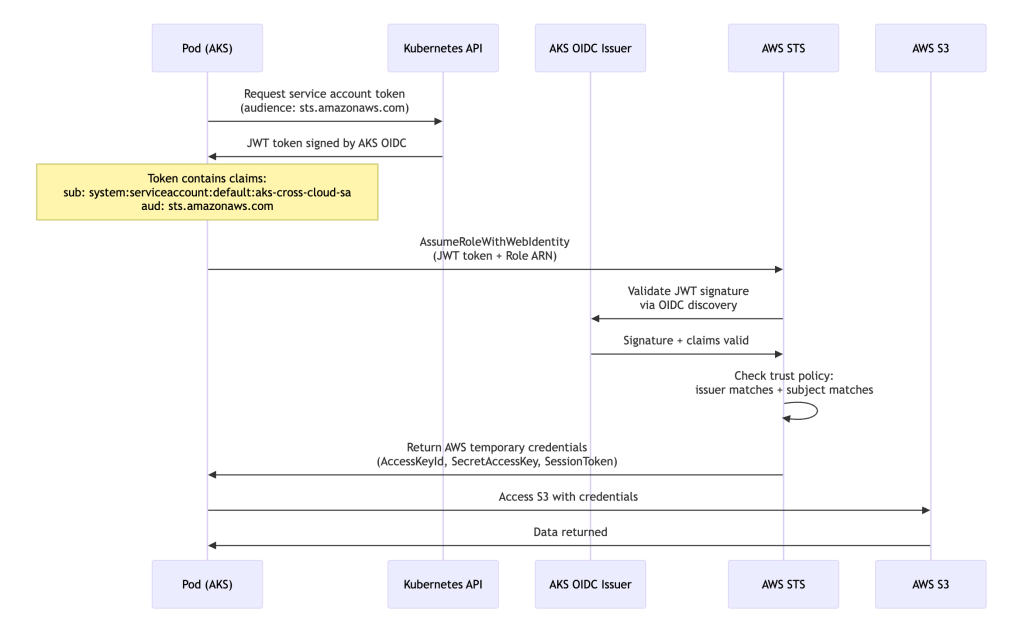

Cross-Cloud Authentication Flow:

Setup Steps:

```bash
# 1. Get the AKS OIDC issuer
OIDC_ISSUER=$(az aks show \
  --resource-group my-aks-rg \
  --name my-aks-cluster \
  --query "oidcIssuerProfile.issuerUrl" -o tsv)

# Remove the https:// prefix for IAM
OIDC_PROVIDER=$(echo $OIDC_ISSUER | sed -e "s/^https:\/\///")

# 2. Create an OIDC provider in AWS
export AWS_PROFILE=<AWS profile for the account where the OIDC provider should be created>

# Extract just the hostname from OIDC_ISSUER
OIDC_HOST=$(echo $OIDC_ISSUER | sed 's|https://||' | sed 's|/.*||')

# Get the certificate thumbprint. `sed 's/^.*=//'` strips the "... Fingerprint=" prefix
# regardless of how the OpenSSL version capitalizes it.
# Note: this fingerprints the first certificate the server presents; AWS documentation
# describes using the top intermediate CA certificate (the last one in the chain).
THUMBPRINT=$(echo | openssl s_client -servername $OIDC_HOST -connect $OIDC_HOST:443 -showcerts 2>/dev/null \
  | openssl x509 -fingerprint -sha1 -noout \
  | sed 's/^.*=//;s/://g')

# Create the OIDC provider
aws iam create-open-id-connect-provider \
  --url $OIDC_ISSUER \
  --client-id-list sts.amazonaws.com \
  --thumbprint-list $THUMBPRINT

# 3. Create the trust policy
YOUR_AWS_ACCOUNT_ID=$(aws sts get-caller-identity | jq -r .Account)
cat > aks-aws-trust-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${YOUR_AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:default:aks-cross-cloud-sa",
          "${OIDC_PROVIDER}:aud": "sts.amazonaws.com"
        }
      }
    }
  ]
}
EOF

# 4. Create the IAM role
aws iam create-role \
  --role-name aks-to-aws-role \
  --assume-role-policy-document file://aks-aws-trust-policy.json

# 5. Attach permissions
aws iam attach-role-policy \
  --role-name aks-to-aws-role \
  --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 2.2. If authentication works, you will see the success logs shown below.

```yaml
# scenario2-2-aks-to-aws.yaml
apiVersion: v1
kind: Pod
metadata:
  name: aks-aws-test
  namespace: default
spec:
  # aks-cross-cloud-sa SA is created in Scenario 2.1
  serviceAccountName: aks-cross-cloud-sa
  restartPolicy: Never
  containers:
  - name: aws-test
    image: python:3.11-slim
    command: ['sh', '-c', 'pip install boto3 && python /app/test_aws_from_aks.py']
    env:
    - name: AWS_ROLE_ARN
      # replace YOUR_AWS_ACCOUNT_ID with the AWS account number where you created the IAM role
      value: "arn:aws:iam::YOUR_AWS_ACCOUNT_ID:role/aks-to-aws-role"
    - name: AWS_WEB_IDENTITY_TOKEN_FILE
      value: /var/run/secrets/aws/tokens/aws-token
    - name: AWS_REGION
      value: us-east-1
    volumeMounts:
    - name: app-code
      mountPath: /app
    - name: aws-token
      mountPath: /var/run/secrets/aws/tokens
      readOnly: true
  volumes:
  - name: app-code
    configMap:
      name: aws-test-code-aks
  - name: aws-token
    projected:
      sources:
      - serviceAccountToken:
          path: aws-token
          expirationSeconds: 3600
          audience: sts.amazonaws.com
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-test-code-aks
  namespace: default
data:
  test_aws_from_aks.py: |
    # Paste test_aws_from_aks.py (shown below) here, indented under this key, or create
    # the ConfigMap with: kubectl create configmap aws-test-code-aks --from-file=test_aws_from_aks.py
```

Important Note: We use `sts.amazonaws.com` as the audience for AWS authentication, which is the AWS best practice. This creates a dedicated token specifically for AWS, separate from the Azure token used in Scenario 2.1.
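If AWS rejects the token, the usual culprit is a `sub` or `aud` claim that does not match the trust policy. A minimal standard-library sketch for checking this from inside the pod follows. The helper names are hypothetical, and the signature is deliberately not verified, because AWS STS performs the real validation.

```python
import base64
import json


def decode_jwt_claims(token: str) -> dict:
    """Return the payload claims of a JWT WITHOUT verifying its signature.

    Debugging aid only: AWS STS, Entra ID and Google STS do the real validation.
    """
    parts = token.strip().split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT with three dot-separated segments")
    payload = parts[1]
    # JWTs use unpadded base64url; restore the padding before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def check_aws_claims(claims: dict, namespace: str, service_account: str) -> list:
    """Compare claims with the trust-policy conditions used in Scenario 2.2."""
    problems = []
    expected_sub = f"system:serviceaccount:{namespace}:{service_account}"
    if claims.get("sub") != expected_sub:
        problems.append(f"sub is {claims.get('sub')!r}, expected {expected_sub!r}")
    aud = claims.get("aud")
    # Kubernetes projected tokens carry "aud" as a list.
    audiences = aud if isinstance(aud, list) else [aud]
    if "sts.amazonaws.com" not in audiences:
        problems.append(f"aud is {aud!r}, expected 'sts.amazonaws.com'")
    return problems
```

Inside the `aks-aws-test` pod, `check_aws_claims(decode_jwt_claims(open('/var/run/secrets/aws/tokens/aws-token').read()), 'default', 'aks-cross-cloud-sa')` should return an empty list. The `iss` claim should also equal the AKS issuer URL registered in AWS.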

Test Code (Python):

```python
# test_aws_from_aks.py
import boto3
import os
import sys


def test_aws_access():
    """Test AWS S3 access from AKS using Web Identity"""
    try:
        # boto3 automatically uses AWS_WEB_IDENTITY_TOKEN_FILE and AWS_ROLE_ARN
        s3_client = boto3.client('s3')

        # List buckets
        response = s3_client.list_buckets()
        print("✅ AWS Authentication successful!")
        print(f"Found {len(response['Buckets'])} S3 buckets:")
        for bucket in response['Buckets'][:5]:
            print(f"  - {bucket['Name']}")

        # Get caller identity
        sts_client = boto3.client('sts')
        identity = sts_client.get_caller_identity()
        print(f"\n🔐 Authenticated as: {identity['Arn']}")
        return True
    except Exception as e:
        print(f"❌ AWS Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_aws_access()
    sys.exit(0 if success else 1)
```

Success logs:

If `kubectl logs -f -n default aks-aws-test` shows output like the following, AKS-to-AWS authentication is working.

```
✅ AWS Authentication successful!
Found <number of buckets> S3 buckets:
  - bucket-1
  - bucket-2
  - ...

🔐 Authenticated as: arn:aws:sts::YOUR_AWS_ACCOUNT_ID:assumed-role/aks-to-aws-role/botocore-session-<some random number>
```

2.3 Authenticating to GCP from AKS

Setup Steps:

```bash
# 1. Get AKS OIDC issuer
OIDC_ISSUER=$(az aks show \
  --resource-group my-aks-rg \
  --name my-aks-cluster \
  --query "oidcIssuerProfile.issuerUrl" -o tsv)

# 2. Set up GCP project
gcloud auth login
gcloud config set project YOUR_PROJECT_ID
PROJECT_ID=$(gcloud config get-value project)
PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")

# 3. Create Workload Identity Pool in GCP
gcloud iam workload-identity-pools create aks-pool \
  --location=global \
  --display-name="AKS Pool"

# 4. Create OIDC provider (the allowed audience is the provider's full resource name)
gcloud iam workload-identity-pools providers create-oidc aks-provider \
  --location=global \
  --workload-identity-pool=aks-pool \
  --issuer-uri="${OIDC_ISSUER}" \
  --allowed-audiences="//iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/aks-pool/providers/aks-provider" \
  --attribute-mapping="google.subject=assertion.sub,attribute.service_account=assertion.sub" \
  --attribute-condition="assertion.sub.startsWith('system:serviceaccount:default:aks-cross-cloud-sa')"

# 5. Create GCP Service Account
gcloud iam service-accounts create aks-gcp-sa \
  --display-name="AKS to GCP Service Account"
GSA_EMAIL="aks-gcp-sa@${PROJECT_ID}.iam.gserviceaccount.com"
echo "Service Account: ${GSA_EMAIL}"

# 6. Create bucket
gcloud storage buckets create gs://aks-cross-cloud \
  --project=${PROJECT_ID} \
  --location=us-central1 \
  --uniform-bucket-level-access

# 7. Grant GCS permissions to service account
gcloud projects add-iam-policy-binding ${PROJECT_ID} \
  --member="serviceAccount:${GSA_EMAIL}" \
  --role="roles/storage.admin"

# 8. Grant bucket-specific permissions (optional, redundant with storage.admin)
gsutil iam ch serviceAccount:${GSA_EMAIL}:objectViewer gs://aks-cross-cloud

# 9. Allow the federated workload identity to impersonate the service account (principalSet binding)
# The member uses the full subject path
gcloud iam service-accounts add-iam-policy-binding ${GSA_EMAIL} \
  --role=roles/iam.workloadIdentityUser \
  --member="principalSet://iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/aks-pool/attribute.service_account/system:serviceaccount:default:aks-cross-cloud-sa"

gcloud iam service-accounts add-iam-policy-binding ${GSA_EMAIL} \
  --role=roles/iam.serviceAccountTokenCreator \
  --member="principalSet://iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/aks-pool/attribute.service_account/system:serviceaccount:default:aks-cross-cloud-sa"
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 2.3. If authentication is working, you will see success logs like those shown below.

```yaml
# scenario2-3-aks-to-gcp.yaml
apiVersion: v1
kind: Pod
metadata:
  name: aks-gcp-test
  namespace: default
spec:
  serviceAccountName: aks-cross-cloud-sa
  restartPolicy: Never
  containers:
  - name: gcp-test
    image: python:3.11-slim
    command:
    - sh
    - -c
    - |
      pip install --no-cache-dir google-auth google-cloud-storage && \
      python /app/test_gcp_from_aks.py
    env:
    - name: GOOGLE_APPLICATION_CREDENTIALS
      value: /var/run/secrets/workload-identity/config.json
    - name: GCP_PROJECT_ID
      value: "YOUR_PROJECT_ID"  # Replace with actual project ID
    volumeMounts:
    - name: workload-identity-config
      mountPath: /var/run/secrets/workload-identity
    - name: app-code
      mountPath: /app
    - name: azure-identity-token
      mountPath: /var/run/secrets/azure/tokens
      readOnly: true
  volumes:
  - name: workload-identity-config
    configMap:
      name: gcp-workload-identity-config-aks
  - name: app-code
    configMap:
      name: gcp-test-code-aks
  - name: azure-identity-token
    projected:
      sources:
      - serviceAccountToken:
          path: azure-identity-token
          expirationSeconds: 3600
          # Replace YOUR_PROJECT_NUMBER; must match --allowed-audiences on the provider
          audience: "//iam.googleapis.com/projects/YOUR_PROJECT_NUMBER/locations/global/workloadIdentityPools/aks-pool/providers/aks-provider"
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: gcp-workload-identity-config-aks
  namespace: default
data:
  config.json: |
    {
      "type": "external_account",
      "audience": "//iam.googleapis.com/projects/YOUR_PROJECT_NUMBER/locations/global/workloadIdentityPools/aks-pool/providers/aks-provider",
      "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
      "token_url": "https://sts.googleapis.com/v1/token",
      "service_account_impersonation_url": "https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/aks-gcp-sa@YOUR_PROJECT_ID.iam.gserviceaccount.com:generateAccessToken",
      "credential_source": {
        "file": "/var/run/secrets/azure/tokens/azure-identity-token"
      }
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: gcp-test-code-aks
  namespace: default
data:
  test_gcp_from_aks.py: |
    # Code below
```

Test Code (Python):

```python
# test_gcp_from_aks.py
import os
from google.auth import default
from google.cloud import storage
import sys


def test_gcp_access():
    try:
        credentials, project = default()
        storage_client = storage.Client(
            credentials=credentials,
            project=os.environ.get('GCP_PROJECT_ID')
        )
        buckets = list(storage_client.list_buckets(max_results=5))
        print("GCP Authentication successful!")
        print(f"Found {len(buckets)} GCS buckets:")
        for bucket in buckets:
            print(f"  - {bucket.name}")
        print(f"\nAuthenticated with project: {project}")
        return True
    except Exception as e:
        print(f"GCP Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_gcp_access()
    sys.exit(0 if success else 1)
```

Success logs:

If `kubectl logs -f -n default aks-gcp-test` shows output like the following, AKS-to-GCP authentication is working.

```
GCP Authentication successful!
Found <number of buckets> GCS buckets:
  - bucket-1
  - bucket-2
  - aks-cross-cloud
  - ...

Authenticated with project: None
```

Scenario 2 Cleanup

After testing Scenario 2 (AKS cross-cloud authentication), clean up the resources:

```bash
# ============================================
# Azure Resources Cleanup
# ============================================
RESOURCE_GROUP="my-aks-rg"
IDENTITY_NAME="aks-cross-cloud-identity"

# Get managed identity client ID
CLIENT_ID=$(az identity show \
  --name $IDENTITY_NAME \
  --resource-group $RESOURCE_GROUP \
  --query clientId -o tsv)

# Delete role assignments
SUBSCRIPTION_ID=$(az account show --query id -o tsv)
az role assignment delete \
  --assignee $CLIENT_ID \
  --scope "/subscriptions/$SUBSCRIPTION_ID"

# Delete federated credential
az identity federated-credential delete \
  --name aks-federated-credential \
  --identity-name $IDENTITY_NAME \
  --resource-group $RESOURCE_GROUP

# Delete managed identity
az identity delete \
  --name $IDENTITY_NAME \
  --resource-group $RESOURCE_GROUP

# Delete storage account
az storage account delete \
  --name akscrosscloud \
  --resource-group $RESOURCE_GROUP \
  --yes

# ============================================
# AWS Resources Cleanup
# ============================================
# Get OIDC provider ARN
OIDC_ISSUER=$(az aks show \
  --resource-group $RESOURCE_GROUP \
  --name my-aks-cluster \
  --query "oidcIssuerProfile.issuerUrl" -o tsv)
OIDC_PROVIDER=$(echo $OIDC_ISSUER | sed -e "s/^https:\/\///")

# Delete IAM role policy attachments
aws iam detach-role-policy \
  --role-name aks-to-aws-role \
  --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess

# Delete IAM role
aws iam delete-role --role-name aks-to-aws-role

# Delete OIDC provider
ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
aws iam delete-open-id-connect-provider \
  --open-id-connect-provider-arn "arn:aws:iam::${ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"

# ============================================
# GCP Resources Cleanup
# ============================================
PROJECT_ID=$(gcloud config get-value project)
PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")
GSA_EMAIL="aks-gcp-sa@${PROJECT_ID}.iam.gserviceaccount.com"

# Remove IAM policy binding
gcloud iam service-accounts remove-iam-policy-binding ${GSA_EMAIL} \
  --role=roles/iam.workloadIdentityUser \
  --member="principalSet://iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/aks-pool/attribute.service_account/system:serviceaccount:default:aks-cross-cloud-sa"

gcloud iam service-accounts remove-iam-policy-binding ${GSA_EMAIL} \
  --role=roles/iam.serviceAccountTokenCreator \
  --member="principalSet://iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/aks-pool/attribute.service_account/system:serviceaccount:default:aks-cross-cloud-sa"

# Remove GCS bucket permissions (if you granted any)
gsutil iam ch -d serviceAccount:${GSA_EMAIL}:objectViewer gs://aks-cross-cloud
gcloud projects remove-iam-policy-binding ${PROJECT_ID} \
  --member="serviceAccount:${GSA_EMAIL}" \
  --role="roles/storage.admin"

# Delete GCP service account
gcloud iam service-accounts delete ${GSA_EMAIL} --quiet

# Delete workload identity provider
gcloud iam workload-identity-pools providers delete aks-provider \
  --location=global \
  --workload-identity-pool=aks-pool \
  --quiet

# Delete workload identity pool
gcloud iam workload-identity-pools delete aks-pool \
  --location=global \
  --quiet

# Delete GCP bucket
gcloud storage buckets delete gs://aks-cross-cloud --quiet

# ============================================
# Kubernetes Resources Cleanup
# ============================================
# Delete test pods
kubectl delete pod aks-azure-test --force --ignore-not-found
kubectl delete pod aks-aws-test --force --ignore-not-found
kubectl delete pod aks-gcp-test --force --ignore-not-found

# Delete ConfigMaps
kubectl delete configmap azure-test-code-aks --ignore-not-found
kubectl delete configmap aws-test-code-aks --ignore-not-found
kubectl delete configmap gcp-workload-identity-config-aks --ignore-not-found
kubectl delete configmap gcp-test-code-aks --ignore-not-found

# Delete service account
kubectl delete serviceaccount aks-cross-cloud-sa --ignore-not-found
```

Scenario 3: Pods Running in GKE

Note: After completing this scenario, make sure to clean up the resources using the cleanup steps at the end of this section.

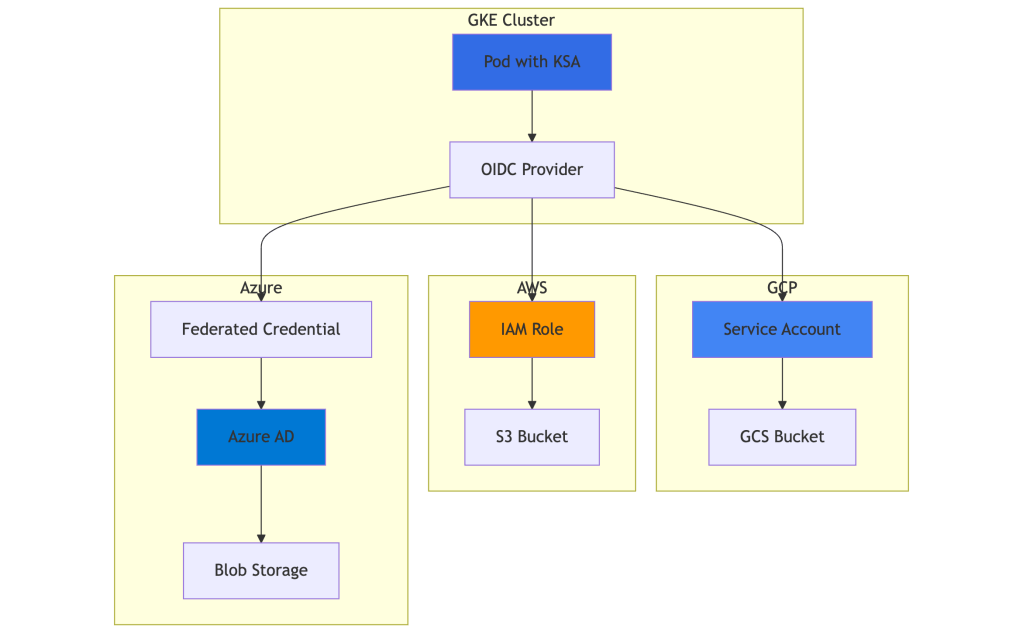

Architecture Overview

3.1 Authenticating to GCP (Native Workload Identity)

Important: In GKE native Workload Identity, Google handles token exchange automatically. No projected token or external_account JSON is required—this is a key difference from EKS/AKS cross-cloud scenarios.
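For contrast, the sketch below rebuilds the `external_account` credential file that the AKS-to-GCP pod in Scenario 2.3 mounts. This is the piece of configuration that native GKE Workload Identity makes unnecessary. The function name and the example values are hypothetical placeholders. The field names and URLs are copied from the Scenario 2.3 ConfigMap.

```python
import json


def external_account_config(project_number: str, pool: str, provider: str,
                            gsa_email: str, token_file: str) -> dict:
    """Rebuild the credential config used for federated (non-GKE) access to GCP."""
    # Must equal the provider's --allowed-audiences and the projected token audience.
    audience = (f"//iam.googleapis.com/projects/{project_number}/locations/global/"
                f"workloadIdentityPools/{pool}/providers/{provider}")
    return {
        "type": "external_account",
        "audience": audience,
        "subject_token_type": "urn:ietf:params:oauth:token-type:jwt",
        "token_url": "https://sts.googleapis.com/v1/token",
        # Step 2 of the exchange: the federated token impersonates the GCP SA.
        "service_account_impersonation_url": (
            "https://iamcredentials.googleapis.com/v1/projects/-/serviceAccounts/"
            f"{gsa_email}:generateAccessToken"),
        # Where the projected Kubernetes token is mounted in the pod.
        "credential_source": {"file": token_file},
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    cfg = external_account_config(
        "123456789012", "aks-pool", "aks-provider",
        "aks-gcp-sa@my-project.iam.gserviceaccount.com",
        "/var/run/secrets/azure/tokens/azure-identity-token")
    print(json.dumps(cfg, indent=2))
```

On GKE, the metadata server performs the equivalent exchange for the annotated Kubernetes service account. This is why the Scenario 3.1 pod only needs the annotation and the `roles/iam.workloadIdentityUser` binding.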

Setup Steps:

```bash
# 1. Enable Workload Identity on the GKE cluster if it is not already enabled.
#    The cluster created in the Prerequisites already has it, so this stays commented out.
PROJECT_ID=$(gcloud config get-value project)
# gcloud container clusters update my-gke-cluster \
#   --region=us-central1 \
#   --workload-pool=${PROJECT_ID}.svc.id.goog

# 2. Create GCP Service Account
gcloud iam service-accounts create gke-cross-cloud-sa \
  --display-name="GKE Cross Cloud Service Account"
GSA_EMAIL="gke-cross-cloud-sa@${PROJECT_ID}.iam.gserviceaccount.com"

# 3. Create GCS bucket
gcloud storage buckets create gs://gke-cross-cloud \
  --project=${PROJECT_ID} \
  --location=us-central1 \
  --uniform-bucket-level-access

# 4. Grant GCS permissions to service account
gcloud projects add-iam-policy-binding ${PROJECT_ID} \
  --member="serviceAccount:${GSA_EMAIL}" \
  --role="roles/storage.admin"
gsutil iam ch serviceAccount:${GSA_EMAIL}:objectViewer gs://gke-cross-cloud

# 5. Bind Kubernetes SA to GCP SA
gcloud iam service-accounts add-iam-policy-binding ${GSA_EMAIL} \
  --role roles/iam.workloadIdentityUser \
  --member "serviceAccount:${PROJECT_ID}.svc.id.goog[default/gke-cross-cloud-sa]"
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 3.1. If authentication is working, you will see success logs like those shown below.

```yaml
# scenario3-1-gke-to-gcp.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: gke-cross-cloud-sa
  namespace: default
  annotations:
    iam.gke.io/gcp-service-account: gke-cross-cloud-sa@YOUR_PROJECT_ID.iam.gserviceaccount.com
---
apiVersion: v1
kind: Pod
metadata:
  name: gke-gcp-test
  namespace: default
spec:
  serviceAccountName: gke-cross-cloud-sa
  restartPolicy: Never
  containers:
  - name: gcp-test
    image: python:3.11-slim
    command:
    - sh
    - -c
    - |
      pip install --no-cache-dir google-auth google-cloud-storage && \
      python /app/test_gcp_from_gke.py
    env:
    - name: GCP_PROJECT_ID
      # Replace with your actual project ID
      value: "YOUR_PROJECT_ID"
    volumeMounts:
    - name: app-code
      mountPath: /app
  volumes:
  - name: app-code
    configMap:
      name: gcp-test-code-gke
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: gcp-test-code-gke
  namespace: default
data:
  test_gcp_from_gke.py: |
    # Code will be provided below
```

Test Code (Python):

```python
# test_gcp_from_gke.py
from google.cloud import storage
from google.auth import default
import os
import sys


def test_gcp_access():
    """Test GCP GCS access using native GKE Workload Identity"""
    try:
        # Automatically uses workload identity
        credentials, project = default()
        storage_client = storage.Client(
            credentials=credentials,
            project=os.environ.get('GCP_PROJECT_ID')
        )

        # List buckets
        buckets = list(storage_client.list_buckets(max_results=5))
        print("✅ GCP Authentication successful!")
        print(f"Found {len(buckets)} GCS buckets:")
        for bucket in buckets:
            print(f"  - {bucket.name}")
        print(f"\n🔐 Authenticated with project: {project}")
        return True
    except Exception as e:
        print(f"❌ GCP Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_gcp_access()
    sys.exit(0 if success else 1)
```

Success Logs:

If `kubectl logs -f -n default gke-gcp-test` shows output like the following, GKE-to-GCP authentication is working.

```
✅ GCP Authentication successful!
Found <number> GCS buckets:
  - bucket-1
  - bucket-2
  - gke-cross-cloud
  - ...

🔐 Authenticated with project: YOUR_PROJECT_ID
```

3.2 Authenticating to AWS from GKE

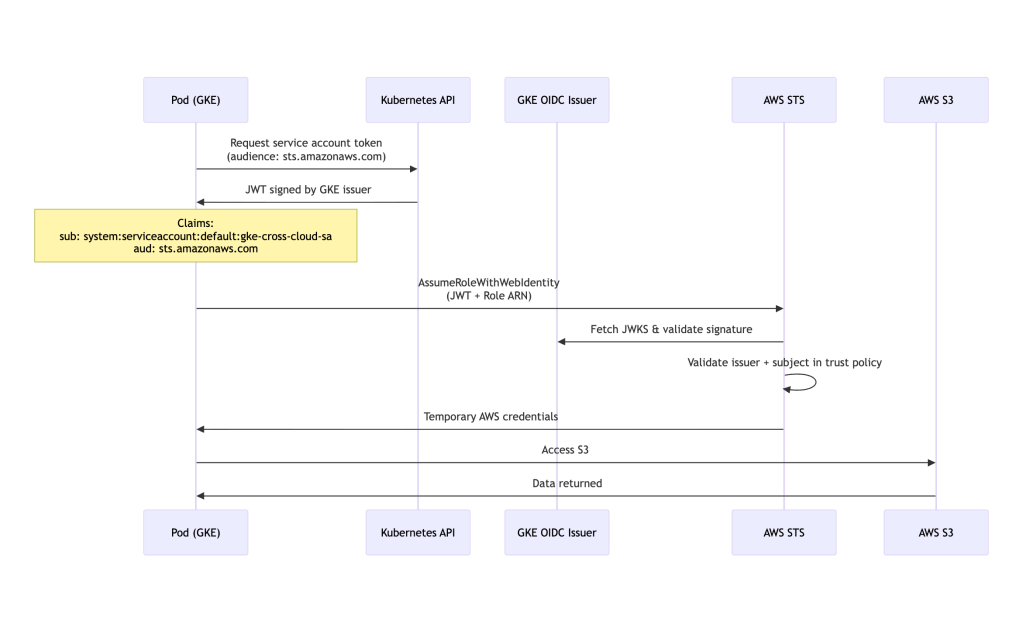

Cross-Cloud Authentication Flow:

Setup Steps:

```bash
# 1. Get GKE OIDC provider URL
PROJECT_ID=$(gcloud config get-value project)
CLUSTER_LOCATION="us-central1"  # Change to your cluster location (region or zone)
CLUSTER_NAME="my-gke-cluster"

# Get the full OIDC issuer URL from the cluster's public discovery document
OIDC_ISSUER=$(curl -s "https://container.googleapis.com/v1/projects/${PROJECT_ID}/locations/${CLUSTER_LOCATION}/clusters/${CLUSTER_NAME}/.well-known/openid-configuration" | jq -r .issuer)
echo "OIDC Issuer: ${OIDC_ISSUER}"

# 2. Create OIDC provider in AWS
export AWS_PROFILE=<set to aws profile where you want to create this>
OIDC_PROVIDER=$(echo $OIDC_ISSUER | sed -e "s/^https:\/\///")

# Extract hostname for thumbprint
OIDC_HOST=$(echo $OIDC_ISSUER | sed 's|https://||' | sed 's|/.*||')

# Get the thumbprint (this fingerprints the first certificate returned;
# AWS docs describe using the top intermediate CA's thumbprint)
THUMBPRINT=$(echo | openssl s_client -servername ${OIDC_HOST} -connect ${OIDC_HOST}:443 -showcerts 2>/dev/null \
  | openssl x509 -fingerprint -sha1 -noout \
  | sed 's/.*Fingerprint=//;s/://g')
echo "Thumbprint: ${THUMBPRINT}"

# Create the OIDC provider in AWS
aws iam create-open-id-connect-provider \
  --url $OIDC_ISSUER \
  --client-id-list sts.amazonaws.com \
  --thumbprint-list $THUMBPRINT

# 3. Create trust policy
YOUR_AWS_ACCOUNT_ID=$(aws sts get-caller-identity | jq -r .Account)
cat > gke-aws-trust-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${YOUR_AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:default:gke-cross-cloud-sa",
          "${OIDC_PROVIDER}:aud": "sts.amazonaws.com"
        }
      }
    }
  ]
}
EOF

# 4. Create IAM role
aws iam create-role \
  --role-name gke-to-aws-role \
  --assume-role-policy-document file://gke-aws-trust-policy.json

# 5. Attach permissions
aws iam attach-role-policy \
  --role-name gke-to-aws-role \
  --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 3.2. If authentication is working, you will see success logs like those shown below.

```yaml
# scenario3-2-gke-to-aws.yaml
apiVersion: v1
kind: Pod
metadata:
  name: gke-aws-test
  namespace: default
spec:
  # gke-cross-cloud-sa SA is created in Scenario 3.1 above
  serviceAccountName: gke-cross-cloud-sa
  restartPolicy: Never
  containers:
  - name: aws-test
    image: python:3.11-slim
    command:
    - sh
    - -c
    - |
      pip install --no-cache-dir boto3 && \
      python /app/test_aws_from_gke.py
    env:
    - name: AWS_ROLE_ARN
      # Replace YOUR_AWS_ACCOUNT_ID with your AWS account ID
      value: "arn:aws:iam::YOUR_AWS_ACCOUNT_ID:role/gke-to-aws-role"
    - name: AWS_WEB_IDENTITY_TOKEN_FILE
      value: /var/run/secrets/tokens/token
    - name: AWS_REGION
      value: us-east-1
    volumeMounts:
    - name: app-code
      mountPath: /app
    - name: aws-token
      mountPath: /var/run/secrets/tokens
      readOnly: true
  volumes:
  - name: app-code
    configMap:
      name: aws-test-code-gke
  - name: aws-token
    projected:
      sources:
      - serviceAccountToken:
          path: token
          expirationSeconds: 3600
          audience: "sts.amazonaws.com"  # must match your AWS OIDC provider audience
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-test-code-gke
  namespace: default
data:
  test_aws_from_gke.py: |
    # Code will be provided below
```

Test Code (Python):

```python
# test_aws_from_gke.py
import boto3
import sys


def test_aws_access():
    """Test AWS S3 access from GKE using OIDC federation"""
    try:
        # SDK automatically uses OIDC credentials from environment variables
        s3_client = boto3.client('s3')

        # List buckets to verify access
        response = s3_client.list_buckets()
        print("✅ AWS Authentication successful!")
        print(f"Found {len(response['Buckets'])} S3 buckets:")
        for bucket in response['Buckets'][:5]:
            print(f"  - {bucket['Name']}")

        # Get caller identity
        sts_client = boto3.client('sts')
        identity = sts_client.get_caller_identity()
        print(f"\n🔐 Authenticated as: {identity['Arn']}")
        return True
    except Exception as e:
        print(f"❌ AWS Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_aws_access()
    sys.exit(0 if success else 1)
```

Success Logs:

If `kubectl logs -f -n default gke-aws-test` shows output like the following, GKE-to-AWS authentication is working.

```
✅ AWS Authentication successful!
Found <number of buckets> S3 buckets:
  - bucket-1
  - bucket-2
  - ...

🔐 Authenticated as: arn:aws:sts::YOUR_AWS_ACCOUNT_ID:assumed-role/gke-to-aws-role/botocore-session-<some random number>
```

3.3 Authenticating to Azure from GKE

Setup Steps:

```bash
# Make sure you have done `az login` and set the subscription before proceeding

# 1. Get GKE OIDC issuer
PROJECT_ID=$(gcloud config get-value project)
CLUSTER_LOCATION="us-central1"  # Change to your cluster location
CLUSTER_NAME="my-gke-cluster"
OIDC_ISSUER=$(curl -s "https://container.googleapis.com/v1/projects/${PROJECT_ID}/locations/${CLUSTER_LOCATION}/clusters/${CLUSTER_NAME}/.well-known/openid-configuration" | jq -r .issuer)
echo "OIDC Issuer: ${OIDC_ISSUER}"

# 2. Create Azure AD application
az ad app create --display-name gke-to-azure-app
APP_ID=$(az ad app list \
  --display-name gke-to-azure-app \
  --query "[0].appId" -o tsv)
echo "App ID: ${APP_ID}"

# 3. Create service principal
az ad sp create --id $APP_ID

# 4. Create federated credential
cat > gke-federated-credential.json <<EOF
{
  "name": "gke-federated-identity",
  "issuer": "${OIDC_ISSUER}",
  "subject": "system:serviceaccount:default:gke-cross-cloud-sa",
  "audiences": [
    "api://AzureADTokenExchange"
  ]
}
EOF
az ad app federated-credential create \
  --id $APP_ID \
  --parameters gke-federated-credential.json

# 5. Assign Azure permissions
SUBSCRIPTION_ID=$(az account show --query id -o tsv)
az role assignment create \
  --assignee $APP_ID \
  --role "Storage Blob Data Reader" \
  --scope "/subscriptions/$SUBSCRIPTION_ID"

# 6. Create resource group (if it does not exist)
az group create \
  --name gke-cross-cloud \
  --location eastus \
  --subscription $SUBSCRIPTION_ID

# 7. Create storage account
#    (storage account names are globally unique; choose your own if this one is taken)
az storage account create \
  --name gkecrosscloud \
  --resource-group gke-cross-cloud \
  --location eastus \
  --sku Standard_LRS \
  --kind StorageV2 \
  --subscription $SUBSCRIPTION_ID

# 8. Create blob container
az storage container create \
  --name test-container \
  --account-name gkecrosscloud \
  --subscription $SUBSCRIPTION_ID \
  --auth-mode login

# You will need TENANT_ID for the manifest below
TENANT_ID=$(az account show --query tenantId -o tsv)
```

Kubernetes Manifest:

Apply the manifest below to validate Scenario 3.3. If authentication is working, you will see success logs like those shown below.

```yaml
# scenario3-3-gke-to-azure.yaml
apiVersion: v1
kind: Pod
metadata:
  name: gke-azure-test
  namespace: default
spec:
  # gke-cross-cloud-sa SA is created in Scenario 3.1 above
  serviceAccountName: gke-cross-cloud-sa
  restartPolicy: Never
  containers:
  - name: azure-test
    image: python:3.11-slim
    command:
    - sh
    - -c
    - |
      pip install --no-cache-dir azure-identity azure-storage-blob && \
      python /app/test_azure_from_gke.py
    env:
    - name: AZURE_CLIENT_ID
      # Replace with your actual App ID
      value: "YOUR_APP_ID"
    - name: AZURE_TENANT_ID
      # Replace with your actual Tenant ID (get via: az account show --query tenantId -o tsv)
      value: "YOUR_TENANT_ID"
    - name: AZURE_FEDERATED_TOKEN_FILE
      value: /var/run/secrets/azure/tokens/azure-identity-token
    - name: AZURE_STORAGE_ACCOUNT
      # Replace with your actual storage account name
      value: "gkecrosscloud"
    volumeMounts:
    - name: app-code
      mountPath: /app
    - name: azure-token
      mountPath: /var/run/secrets/azure/tokens
      readOnly: true
  volumes:
  - name: app-code
    configMap:
      name: azure-test-code-gke
  - name: azure-token
    projected:
      sources:
      - serviceAccountToken:
          path: azure-identity-token
          expirationSeconds: 3600
          audience: api://AzureADTokenExchange
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: azure-test-code-gke
  namespace: default
data:
  test_azure_from_gke.py: |
    # Code will be provided below
```

Test Code (Python):

```python
# test_azure_from_gke.py
from azure.identity import DefaultAzureCredential
from azure.storage.blob import BlobServiceClient
import os
import sys


def test_azure_access():
    """Test Azure Blob Storage access from GKE using federated credentials"""
    try:
        # DefaultAzureCredential automatically detects federated identity
        credential = DefaultAzureCredential()
        storage_account = os.environ.get('AZURE_STORAGE_ACCOUNT')
        account_url = f"https://{storage_account}.blob.core.windows.net"
        blob_service_client = BlobServiceClient(
            account_url=account_url,
            credential=credential
        )

        # List containers
        containers = list(blob_service_client.list_containers())
        print("✅ Azure Authentication successful!")
        print(f"Found {len(containers)} containers:")
        for container in containers[:5]:
            print(f"  - {container.name}")
        return True
    except Exception as e:
        print(f"❌ Azure Authentication failed: {str(e)}")
        import traceback
        traceback.print_exc()
        return False


if __name__ == "__main__":
    success = test_azure_access()
    sys.exit(0 if success else 1)
```

Success Logs:

If `kubectl logs -f -n default gke-azure-test` shows output like the following, GKE-to-Azure authentication is working.

```
✅ Azure Authentication successful!
Found 1 containers:
  - test-container
```

Scenario 3 Cleanup

After testing Scenario 3 (GKE cross-cloud authentication), clean up the resources:

```bash
# ============================================
# GCP Resources Cleanup
# ============================================
PROJECT_ID=$(gcloud config get-value project)
GSA_EMAIL="gke-cross-cloud-sa@${PROJECT_ID}.iam.gserviceaccount.com"

# Remove IAM policy binding
gcloud iam service-accounts remove-iam-policy-binding ${GSA_EMAIL} \
  --role=roles/iam.workloadIdentityUser \
  --member="serviceAccount:${PROJECT_ID}.svc.id.goog[default/gke-cross-cloud-sa]" \
  --quiet

# Delete GCS bucket
gcloud storage rm -r gs://gke-cross-cloud

# Remove project-level permissions
gcloud projects remove-iam-policy-binding ${PROJECT_ID} \
  --member="serviceAccount:${GSA_EMAIL}" \
  --role="roles/storage.admin"

# Delete GCP service account
gcloud iam service-accounts delete ${GSA_EMAIL} --quiet

# ============================================
# AWS Resources Cleanup
# ============================================
# Get OIDC provider info
PROJECT_ID=$(gcloud config get-value project)
CLUSTER_LOCATION="us-central1"  # Update to your cluster location
CLUSTER_NAME="my-gke-cluster"
OIDC_ISSUER=$(curl -s "https://container.googleapis.com/v1/projects/${PROJECT_ID}/locations/${CLUSTER_LOCATION}/clusters/${CLUSTER_NAME}/.well-known/openid-configuration" | jq -r .issuer)
OIDC_PROVIDER=$(echo $OIDC_ISSUER | sed -e "s/^https:\/\///")

# Delete IAM role policy attachments
aws iam detach-role-policy \
  --role-name gke-to-aws-role \
  --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess

# Delete IAM role
aws iam delete-role --role-name gke-to-aws-role

# Delete OIDC provider
ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
aws iam delete-open-id-connect-provider \
  --open-id-connect-provider-arn "arn:aws:iam::${ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"

# ============================================
# Azure Resources Cleanup
# ============================================
# Get App ID
APP_ID=$(az ad app list \
  --display-name gke-to-azure-app \
  --query "[0].appId" -o tsv)

# Delete role assignments
SUBSCRIPTION_ID=$(az account show --query id -o tsv)
az role assignment delete \
  --assignee $APP_ID \
  --scope "/subscriptions/$SUBSCRIPTION_ID"

# Delete federated credentials
az ad app federated-credential delete \
  --id $APP_ID \
  --federated-credential-id gke-federated-identity

# Delete service principal
az ad sp delete --id $APP_ID

# Delete app registration
az ad app delete --id $APP_ID

# Delete resource group
az group delete --name gke-cross-cloud --subscription $SUBSCRIPTION_ID --yes --no-wait

# ============================================
# Kubernetes Resources Cleanup
# ============================================
# Delete test pods
kubectl delete pod gke-gcp-test --force --ignore-not-found
kubectl delete pod gke-aws-test --force --ignore-not-found
kubectl delete pod gke-azure-test --force --ignore-not-found

# Delete ConfigMaps
kubectl delete configmap gcp-test-code-gke --ignore-not-found
kubectl delete configmap aws-test-code-gke --ignore-not-found
kubectl delete configmap azure-test-code-gke --ignore-not-found

# Delete service account
kubectl delete serviceaccount gke-cross-cloud-sa --ignore-not-found
```

Security Best Practices

1. Principle of Least Privilege

- Grant only the minimum permissions required

- Use resource-specific policies instead of broad access

- Regularly audit and review permissions

Example:

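```bash
# ❌ BAD: Subscription-wide access
az role assignment create \
  --assignee $APP_ID \
  --role "Storage Blob Data Reader" \
  --scope "/subscriptions/$SUBSCRIPTION_ID"

# ✅ GOOD: Resource-specific access (scoped to a single storage account)
STORAGE_ACCOUNT_ID=$(az storage account show \
  --name gkecrosscloud \
  --resource-group gke-cross-cloud \
  --query id -o tsv)
az role assignment create \
  --assignee $APP_ID \
  --role "Storage Blob Data Reader" \
  --scope $STORAGE_ACCOUNT_ID
```

2. Namespace Isolation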

- Use different service accounts per namespace

- Implement namespace-level RBAC

- Separate production and development workloads
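On the AWS side, one way to enforce namespace isolation is to give each namespace its own IAM role. Each role's trust policy admits exactly one `system:serviceaccount:<namespace>:<name>` subject, using the same shape as `aks-aws-trust-policy.json` in Scenario 2.2. The sketch below generates such policies. The helper name, the namespaces, the `app-sa` account and the provider path are all hypothetical placeholders.

```python
import json


def trust_policy_for(account_id: str, oidc_provider: str,
                     namespace: str, service_account: str) -> dict:
    """Trust policy admitting exactly one Kubernetes service account.

    oidc_provider is the issuer URL without "https://" (OIDC_PROVIDER in the
    setup steps).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {
                "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    # Exact match: only this namespace/service account pair.
                    f"{oidc_provider}:sub":
                        f"system:serviceaccount:{namespace}:{service_account}",
                    f"{oidc_provider}:aud": "sts.amazonaws.com",
                }
            },
        }],
    }


if __name__ == "__main__":
    # Hypothetical values: one role (and one trust policy) per namespace.
    for ns in ("dev", "production"):
        policy = trust_policy_for("111122223333", "oidc.example.com/cluster-id", ns, "app-sa")
        print(f"--- {ns} ---")
        print(json.dumps(policy, indent=2))
```

Each printed policy can be saved to a file and passed to `aws iam create-role --assume-role-policy-document file://...` under a per-namespace role name. A pod in `dev` then cannot assume the `production` role, even if both run on the same cluster.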

3. Token Lifetime Management

- Use short-lived tokens (default is usually 1 hour)

- Enable automatic token rotation

- Monitor token usage and expiration
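Projected tokens are rotated by the kubelet, not by your application. According to the Kubernetes documentation, the kubelet refreshes a projected token once the token is older than 80% of its TTL or older than 24 hours. Applications should therefore re-read the mounted file rather than keep a copy. The sketch below is a hypothetical helper that summarises a token's lifetime from its decoded `iat`/`exp` claims, which are Unix timestamps in the token's base64url-encoded middle segment.

```python
import time
from typing import Optional


def token_lifetime_report(claims: dict, now: Optional[float] = None) -> dict:
    """Summarise the lifetime of a projected service account token.

    `claims` is the decoded JWT payload; `iat` and `exp` are Unix seconds.
    """
    now = time.time() if now is None else now
    iat, exp = claims["iat"], claims["exp"]
    ttl = exp - iat
    age = now - iat
    return {
        "ttl_seconds": ttl,
        "remaining_seconds": max(0, exp - now),
        # Mirrors the kubelet's documented rotation rule: a refreshed token is
        # written once the current one is older than 80% of its TTL or 24 hours.
        "past_refresh_point": age > 0.8 * ttl or age > 24 * 3600,
    }
```

For a token minted with `expirationSeconds: 3600`, the refresh point is 48 minutes after issue. If a token your application holds in memory is past that point, the application is probably caching it instead of re-reading the volume.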

4. Audit Logging

- Enable cloud provider audit logs

- Monitor authentication attempts

- Set up alerts for suspicious activity

```yaml
# Example: Add labels for better tracking
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cross-cloud-sa
  namespace: production
  labels:
    app: my-app
    environment: production
    team: platform
  annotations:
    purpose: "Cross-cloud authentication for data pipeline"
```

5. Network Security

- Use private endpoints where possible

- Implement egress filtering

- Use VPC/VNet peering for enhanced security

6. Credential Scanning

- Never commit workload identity configs to git

- Use tools like git-secrets, gitleaks

- Implement pre-commit hooks
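A pre-commit check can be as small as a script that rejects files containing well-known static credential shapes. The sketch below is minimal and uses illustrative patterns only. It is not exhaustive; dedicated tools such as gitleaks maintain far larger rule sets. The script and pattern names are hypothetical.

```python
import re
import sys
from pathlib import Path

# Illustrative patterns for common static credentials (not exhaustive).
PATTERNS = {
    # Long-term (AKIA) and temporary (ASIA) AWS access key IDs.
    "AWS access key ID": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Downloaded GCP service account key files; external_account configs do not match.
    "GCP service account key": re.compile(r'"type"\s*:\s*"service_account"'),
    "Private key block": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_text(text: str) -> list:
    """Return (line number, pattern name) for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def main(paths) -> int:
    """Scan the given files; return 1 if anything suspicious was found."""
    status = 0
    for path in paths:
        try:
            text = Path(path).read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # deleted or unreadable files are skipped
        for lineno, name in scan_text(text):
            print(f"{path}:{lineno}: possible {name}")
            status = 1
    return status


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1:]))
```

Hook frameworks such as pre-commit pass the staged file names as arguments, so the script can be registered as a local hook. A non-zero exit status blocks the commit.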

Production Hardening

For production deployments, implement these additional security measures:

1. Strict Audience Claims

```
# ❌ Avoid wildcards or non-standard audiences
"${OIDC_PROVIDER}:aud": "*"

# ❌ Avoid using Azure audience for AWS (works but not best practice)
"${OIDC_PROVIDER}:aud": "api://AzureADTokenExchange"  # For AWS targets

# ✅ Use cloud-specific audience matching
# For AWS:
"${OIDC_PROVIDER}:aud": "sts.amazonaws.com"
# For Azure:
"audiences": ["api://AzureADTokenExchange"]
# For GCP:
--allowed-audiences="//iam.googleapis.com/projects/PROJECT_NUMBER/locations/global/workloadIdentityPools/POOL/providers/PROVIDER"
```

2. Exact Subject Matching

```bash
# ❌ Avoid broad patterns in production
--attribute-condition="assertion.sub.startsWith('system:serviceaccount:')"

# ✅ Use exact namespace and service account
--attribute-condition="assertion.sub=='system:serviceaccount:production:app-sa'"
```

3. Dedicated Identity Pools per Cluster

- Create separate Workload Identity Pools for each cluster

- Avoid sharing pools across environments

- Simplifies rotation and isolation
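With one pool per cluster, the same four strings must stay consistent for every cluster: the pool and provider IDs, the audience, and the `principalSet://` member. The sketch below derives them from a single cluster name. The `<cluster>-pool` / `<cluster>-provider` naming convention is a hypothetical choice, and the member format assumes the `attribute.service_account=assertion.sub` mapping used in Scenario 2.3. With cluster `aks`, it reproduces the `aks-pool`/`aks-provider` names from that scenario.

```python
def wif_names(project_number: str, cluster: str, namespace: str, ksa: str) -> dict:
    """Derive per-cluster Workload Identity Federation names and references.

    Uses a hypothetical "<cluster>-pool" / "<cluster>-provider" convention;
    the resulting IDs must still satisfy GCP's ID format rules.
    """
    pool = f"{cluster}-pool"
    provider = f"{cluster}-provider"
    pool_path = (f"projects/{project_number}/locations/global/"
                 f"workloadIdentityPools/{pool}")
    subject = f"system:serviceaccount:{namespace}:{ksa}"
    return {
        "pool": pool,
        "provider": provider,
        # Value for --allowed-audiences and for the projected token's audience.
        "audience": f"//iam.googleapis.com/{pool_path}/providers/{provider}",
        # Member for the roles/iam.workloadIdentityUser binding.
        "member": (f"principalSet://iam.googleapis.com/{pool_path}/"
                   f"attribute.service_account/{subject}"),
        # Exact-match provider condition, as recommended under Exact Subject Matching.
        "attribute_condition": f"assertion.sub=='{subject}'",
    }


if __name__ == "__main__":
    # Hypothetical project number and cluster/namespace names.
    for key, value in wif_names("123456789012", "prod-aks", "production", "app-sa").items():
        print(f"{key}: {value}")
```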

4. Resource-Scoped IAM

```bash
# ❌ Avoid project/subscription-wide roles
gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member="serviceAccount:sa@project.iam.gserviceaccount.com" \
  --role="roles/storage.admin"

# ✅ Use bucket-level or resource-level IAM
gsutil iam ch serviceAccount:sa@project.iam.gserviceaccount.com:objectViewer \
  gs://specific-bucket
```

5. OIDC Provider Rotation

- Rotate cluster OIDC providers when cluster is recreated

- Update federated credentials accordingly

- Maintain backward compatibility during transition
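A recreated cluster typically gets a new issuer URL; EKS and AKS issuer URLs embed a per-cluster identifier. Role trust policies that still name the old provider then stop matching without any obvious error. The sketch below flags such leftovers before the old provider is deleted. The helper names are hypothetical. `provider_path` applies the same transformation as the `sed` command in the setup steps.

```python
def provider_path(issuer_url: str) -> str:
    """Drop the https:// scheme, as the setup steps do for OIDC_PROVIDER."""
    prefix = "https://"
    return issuer_url[len(prefix):] if issuer_url.startswith(prefix) else issuer_url


def stale_trust_entries(policy: dict, account_id: str, issuer_url: str) -> list:
    """List trust-policy principals and condition keys not tied to the current issuer."""
    current = provider_path(issuer_url)
    expected_arn = f"arn:aws:iam::{account_id}:oidc-provider/{current}"
    stale = []
    for i, stmt in enumerate(policy.get("Statement", [])):
        principal = stmt.get("Principal", {})
        federated = principal.get("Federated") if isinstance(principal, dict) else None
        if federated is None:
            continue  # not a web-identity statement
        if federated != expected_arn:
            stale.append(f"statement {i}: principal {federated}")
        # Condition keys look like "<provider path>:sub" / "<provider path>:aud".
        for entries in stmt.get("Condition", {}).values():
            for key in entries:
                if not key.startswith(current + ":"):
                    stale.append(f"statement {i}: condition key {key}")
    return stale
```

Run it against each role's current trust policy (for example, the `AssumeRolePolicyDocument` returned by `aws iam get-role`). Update every flagged role before removing the old provider, so workloads can move over without an outage.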

6. Comprehensive Audit Logging

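```bash
# AWS: Enable CloudTrail (a trail needs an S3 bucket whose policy lets CloudTrail write to it)
aws cloudtrail create-trail --name cross-cloud-audit --s3-bucket-name <your-cloudtrail-bucket>
aws cloudtrail start-logging --name cross-cloud-audit

# Azure: Enable Azure Monitor diagnostic settings
# (requires arguments such as --name, --resource, a destination like --workspace, and --logs)
az monitor diagnostic-settings create

# GCP: Admin Activity audit logs are enabled by default;
# Data Access audit logs must be enabled explicitly
gcloud logging read "protoPayload.serviceName=sts.googleapis.com"
```

7. Avoid Common Anti-Patterns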

- ❌ Don’t use `roles/storage.admin` when read access suffices

- ❌ Don’t use `startsWith()` conditions in production

- ❌ Don’t share service accounts across namespaces

- ❌ Don’t use overly permissive audience claims
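Some of these anti-patterns can be caught mechanically in code review or CI. The sketch below is a minimal linter for two of them: loose claim matching in an AWS web-identity trust policy, and prefix matching in a GCP provider attribute condition. The checks and helper names are hypothetical and illustrative, not an official policy validator.

```python
def lint_trust_policy(policy: dict) -> list:
    """Flag loose claim matching in an AWS web-identity trust policy."""
    findings = []
    for i, stmt in enumerate(policy.get("Statement", [])):
        conditions = stmt.get("Condition", {})
        claims_checked = set()
        for operator, entries in conditions.items():
            for key, value in entries.items():
                # Keys look like "<provider path>:sub"; keep the claim name.
                claims_checked.add(key.rsplit(":", 1)[-1])
                values = value if isinstance(value, list) else [value]
                if operator != "StringEquals":
                    findings.append(f"statement {i}: {key} uses {operator}; prefer StringEquals")
                if any(isinstance(v, str) and "*" in v for v in values):
                    findings.append(f"statement {i}: {key} contains a wildcard")
        for claim in ("sub", "aud"):
            if claim not in claims_checked:
                findings.append(f"statement {i}: no condition on the '{claim}' claim")
    return findings


def lint_attribute_condition(condition: str) -> list:
    """Flag prefix matching in a GCP Workload Identity provider condition."""
    if "startsWith(" in condition:
        return ["attribute condition uses startsWith(); prefer an exact assertion.sub == match"]
    return []
```

The trust policies from the scenarios above pass `lint_trust_policy` unchanged. The Scenario 2.3 provider condition, however, is flagged by `lint_attribute_condition` because it uses `startsWith()`. That is acceptable for a demo, but it should be tightened for production.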

Performance Considerations

Token Caching

Cloud SDKs automatically cache tokens, but you can optimize:

```python
import boto3

# Reuse clients instead of creating new ones

# Bad - creates a new client each time
def bad_example():
    for i in range(100):
        s3 = boto3.client('s3')
        s3.list_buckets()

# Good - reuse the client
def good_example():
    s3 = boto3.client('s3')
    for i in range(100):
        s3.list_buckets()
```

Connection Pooling

Use connection pooling for better performance:

```python
import boto3
from botocore.config import Config

config = Config(
    max_pool_connections=50,
    retries={'max_attempts': 3}
)
s3 = boto3.client('s3', config=config)
```

Comparison Matrix

| Feature | EKS (IRSA) | AKS (Workload Identity) | GKE (Workload Identity) |
|---|---|---|---|
| Setup Complexity | Medium | Low | Low |
| Native Integration | AWS | Azure | GCP |
| Cross-cloud Support | Via OIDC | Via Federated Credentials | Via WIF Pools |
| Token Injection | Automatic | Automatic (webhook) | Automatic |
| Token Lifetime | 1 hour (configurable) | 24 hours (default) | 1 hour (default) |
| Audience Customization | ✅ Yes | ✅ Yes | ✅ Yes |
| Pod Identity Webhook | Not required | Required | Not required |
| Annotation Required | Role ARN | Client ID | GSA Email |
| Native to K8s | Yes | Yes | Yes (GKE only) |
| Requires External JSON | No (AWS), Yes (cross-cloud) | No (Azure), Yes (cross-cloud) | No (GCP), Yes (cross-cloud) |
| STS Call Required | Yes | Yes | Yes |
| Most Complex Setup | Medium | Medium | High (for cross-cloud) |

Cloud-Specific Characteristics

| Characteristic | AWS | Azure | GCP |
|---|---|---|---|
| Validation Method | AssumeRoleWithWebIdentity | Federated credential match | Workload Identity Pool exchange |
| Token Exchange | Direct STS call | Entra ID token exchange | Multi-step (STS → SA impersonation) |
| Best Practice Audience | sts.amazonaws.com | api://AzureADTokenExchange | WIF Pool-specific |
| Audience Flexibility | Strict (validates aud claim) | Strict (must match federated credential) | Flexible (configured in pool provider) |
| Thumbprint Required | Optional (IAM retrieves it if omitted) | No | No |
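The "Best Practice Audience" row translates into a simple pre-flight check: before a pod calls a cloud's STS, confirm its token carries the audience that cloud expects. A minimal Python sketch; the AWS and Azure values come from the table, while the GCP entry assumes the pool provider uses its default allowed audience (the provider's full resource name) and uses a hypothetical project number, pool ID, and provider ID.

```python
# Audience each target cloud expects in the Kubernetes token's "aud" claim.
# AWS and Azure values follow the table above; the GCP entry is a hypothetical
# provider name in the assumed default allowed-audience format.
EXPECTED_AUDIENCE = {
    "aws": "sts.amazonaws.com",
    "azure": "api://AzureADTokenExchange",
    "gcp": (
        "https://iam.googleapis.com/projects/123456789012/locations/global/"
        "workloadIdentityPools/my-pool/providers/my-provider"
    ),
}


def audience_ok(claims: dict, target_cloud: str) -> bool:
    """Return True if the token's aud claim contains the audience the target cloud expects."""
    aud = claims.get("aud", [])
    audiences = aud if isinstance(aud, list) else [aud]  # aud may be a string or a list
    return EXPECTED_AUDIENCE[target_cloud] in audiences


# A token projected for AWS would fail Azure's and GCP's audience checks.
claims = {"sub": "system:serviceaccount:prod:app", "aud": ["sts.amazonaws.com"]}
for cloud in EXPECTED_AUDIENCE:
    print(cloud, audience_ok(claims, cloud))
```

This is why a pod that talks to several clouds typically mounts one projected token per audience rather than reusing a single token everywhere.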

Migration Guide

From Static Credentials to Workload Identity

Step 1: Audit current credential usage

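```bash
# List Opaque secrets (candidates that may hold static cloud credentials) with their namespaces
kubectl get secrets --all-namespaces -o json | \
  jq -r '.items[] | select(.type=="Opaque") | "\(.metadata.namespace)/\(.metadata.name)"'
```

Step 2: Set up workload identity (follow the scenarios above)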

Step 3: Deploy test pod with workload identity

Step 4: Validate access before removing static credentials

Step 5: Update application code to remove explicit credential loading

Step 6: Remove credential secrets

```bash
kubectl delete secret <credential-secret-name> -n <namespace>
```

Step 7: Monitor and verify in production
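The Step 1 audit lists every Opaque secret, and most of those will not be cloud credentials. A minimal Python sketch that narrows the list, assuming you saved the output of `kubectl get secrets --all-namespaces -o json` and load it with `json.load`. The key names and the GCP key-file check are hypothetical heuristics, not an exhaustive detector.

```python
import base64
import json

# Hypothetical heuristics: data keys commonly used for static cloud credentials.
SUSPICIOUS_KEYS = {
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AZURE_CLIENT_SECRET",
    "GOOGLE_APPLICATION_CREDENTIALS",
}


def looks_like_gcp_key(encoded_value: str) -> bool:
    """True if a base64-encoded secret value decodes to a GCP service account key JSON."""
    try:
        doc = json.loads(base64.b64decode(encoded_value))
    except (ValueError, TypeError):  # not base64, not UTF-8, or not JSON
        return False
    return isinstance(doc, dict) and doc.get("type") == "service_account" and "private_key" in doc


def find_credential_secrets(secret_list: dict) -> list[str]:
    """Return namespace/name for Opaque secrets that look like static cloud credentials."""
    hits = []
    for item in secret_list.get("items", []):
        if item.get("type") != "Opaque":
            continue
        data = item.get("data") or {}
        if data.keys() & SUSPICIOUS_KEYS or any(looks_like_gcp_key(v) for v in data.values()):
            meta = item["metadata"]
            hits.append(f"{meta['namespace']}/{meta['name']}")
    return hits
```

Each hit is a candidate for Steps 3 to 6; confirm the consuming workload has switched to workload identity before deleting the secret.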

Conclusion

Cross-cloud authentication using workload identity provides a secure, scalable, and maintainable approach to multi-cloud Kubernetes deployments. By leveraging OIDC federation, you eliminate the risks associated with static credentials while gaining fine-grained access control and better auditability.

Key Takeaways:

- Always prefer workload identity over static credentials

- Use native integrations when available (IRSA for EKS, Workload Identity for AKS/GKE)

- Follow the principle of least privilege in IAM policies with resource-specific scopes

- Implement strict claim matching in production (exact `sub` and `aud` matching)

- Test thoroughly before production deployment

- Monitor and audit authentication patterns regularly with cloud-native logging

- Keep SDKs updated for the latest security patches

- Use dedicated identity pools per cluster in production

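- Update the federation trust (IAM OIDC providers, federated credentials, pool providers) when clusters are recreated, since the new cluster's OIDC issuer URL or signing keys differ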


Final Cleanup

If you’re completely done with all scenarios and want to delete the Kubernetes clusters, refer to the Cluster Cleanup section in the prerequisites.

This guide was created to help platform engineers implement secure, passwordless authentication across multiple cloud providers in Kubernetes environments.

